This article provides an overview of Kubernetes and related technologies and their relationship to enterprise application delivery.

Kubernetes Overview

Overall, the basic concept underpinning the Kubernetes product is a simple one; with the advent of containers and large distributed software delivery architectures, a system was needed to schedule and manage workloads being run in a containerized ecosystem. The problem with large, distributed, containerized applications is that they require a much more dynamic landscape than traditional applications; managing the application’s underlying infrastructure manually or using older orchestration techniques would be prohibitively difficult, especially if you want to reap the benefits touted by the containerization movement (portability, scalability, reuse, etc.). To meet this need, and to provide features needed to unlock the potential of massively distributed systems, Google released Kubernetes as an open source product in 2014.

What about OpenShift?

OpenShift is a Kubernetes-based product from Red Hat (now part of IBM) that adds a set of enterprise-friendly features on top of the standard Kubernetes components. To get a clearer picture of the OpenShift/Kubernetes relationship, it’s best to start with the understanding that Kubernetes is less of a “product” and more of a “framework”: out of the box, Kubernetes is a set of independent yet related components and services that require a fair amount of work to set up and run. OpenShift is a packaged Kubernetes distribution that simplifies the setup and operation of Kubernetes-based clusters while adding features not found in upstream Kubernetes, including:

- A web-based administrative UI

- Built-in container registry

- Enterprise-grade security

- Internal log aggregation

- Built-in routing and load balancing

- Streamlined installation process

- Source control integration

These features make OpenShift very attractive for enterprise Kubernetes implementations. As with most enterprise-oriented products, OpenShift focuses heavily on features and processes that simplify the out-of-the-box setup of the Kubernetes platform while maintaining a strong foundation for distributed application development and delivery, from both a security and a stability standpoint.

It is worth noting that other Kubernetes-based products and distributions also focus on the enterprise: Amazon, Microsoft, and Google all have managed Kubernetes offerings, and VMware, Rancher, Pivotal, and Docker all have on-premises (or hybrid) Kubernetes solutions.

General Kubernetes Concepts

There is a vast body of work available on the internal workings of the Kubernetes engine, so we will not dig into every component that’s a part of a Kubernetes cluster; instead, we will cover some of the most important parts and discuss how they interact to provide a framework to use when deploying distributed containerized applications.

The high-level concepts that we will review include the following:

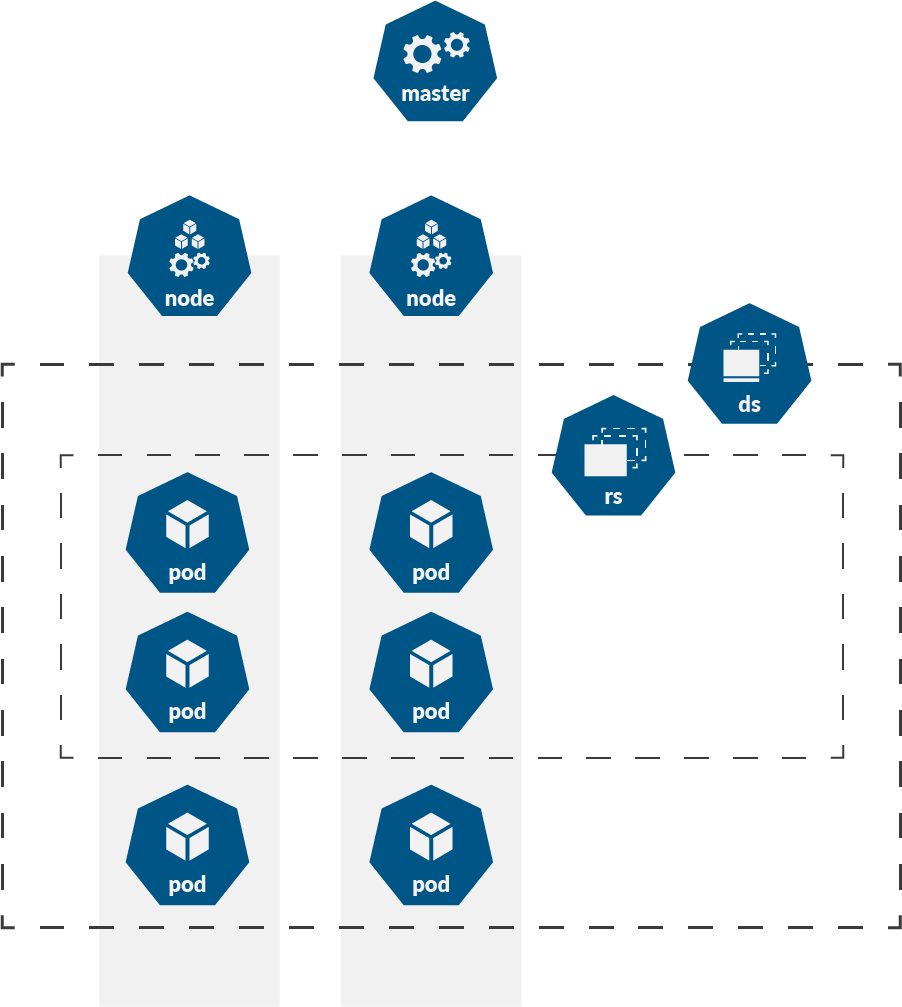

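- Kubernetes Master – The Kubernetes Master (now more commonly called the control plane) is responsible for maintaining the overall state of your Kubernetes cluster. Its processes, including the API server, the etcd key-value store, the scheduler, and the controller manager, store, update, and verify the state of the individual components in the cluster, including all of the components mentioned below. Small clusters often run the Master on a single node, while production clusters typically run several control-plane nodes for high availability.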

- Node – A Node represents a compute resource in the Kubernetes cluster; usually, this is either a VM running on top of a separate hypervisor or a bare metal server. Nodes are used to host the container runtime that runs the actual containers that compose your application.

- Container – A container is a single, self-contained application instance running in the container runtime of choice. Historically this has most often been Docker, though other runtimes (such as containerd, CRI-O, and, in earlier releases, rkt) are supported. In Kubernetes, containers are always deployed inside Pods.

- Pod – A Pod is the smallest deployable unit in Kubernetes. It consists of one or more containers, along with the storage/network resources, options, and configuration needed to run them. Most frequently, a Pod wraps a single container, but it can hold several tightly coupled containers (for example, when using the sidecar, ambassador, or adapter design patterns).

- ReplicaSet – A ReplicaSet maintains a stable set of replica Pods that Kubernetes should ensure are running at any given time. Its fields (a label selector, a replica count, and a Pod template) determine how it manages those Pods, creating, deleting, and taking ownership of Pods as needed to keep the desired number running.

- Deployment – A Deployment provides declarative updates for Pods and ReplicaSets: it describes a desired state, and Kubernetes changes the actual state of the environment to match it at a controlled rate (for example, through a rolling update). For most stateless applications, the Deployment is the highest-level abstraction you will work with directly; in practice, you rarely create ReplicaSets yourself.

Note that these are not the sum total of the entities and components in a Kubernetes environment; however, they are enough to give a good illustration of how a Kubernetes environment functions.
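To make these objects concrete, here is a minimal sketch of a Deployment manifest built as a Python dictionary and serialized to JSON, which `kubectl apply -f` accepts alongside YAML. The application name `web`, the image `nginx:1.25`, the port, and the resource values are illustrative assumptions, not recommendations. The Deployment wraps a Pod template, and Kubernetes creates a ReplicaSet from it to keep `replicas` copies of that Pod running.

```python
import json


def make_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest (illustrative values only)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Desired number of Pod replicas; the ReplicaSet created by this
            # Deployment works to keep this many Pods running.
            "replicas": replicas,
            # The selector must match the labels in the Pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # A single-container Pod, the most common case.
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 80}],
                            # Assumed example values; size these for your workload.
                            "resources": {
                                "requests": {"cpu": "100m", "memory": "128Mi"},
                                "limits": {"cpu": "500m", "memory": "256Mi"},
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = make_deployment("web", "nginx:1.25", replicas=3)
    # Save the output as web.json and apply it with: kubectl apply -f web.json
    print(json.dumps(manifest, indent=2))
```

Generating manifests in code is only one option; most teams keep them as YAML files in source control, but the structure (Deployment, selector, Pod template, containers) is the same either way.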

Risks of Using Kubernetes

Given the overall complexity of the Kubernetes platform, it should come as no surprise that the risks involved in using it are largely tied up in the initial configuration and management of the platform itself. It is a complex system to get up and running, and once it is operational, it requires a fair amount of internal expertise to maintain and troubleshoot. These risks can all be partially or fully mitigated, generally by careful planning and execution, but they should all be taken into account when planning a Kubernetes implementation.

Risks During Initial Installation

The first major risk in planning even a small Kubernetes rollout in your organization is that the initial installation will be difficult. If you go with a manual rollout rather than an enterprise vendor product such as OpenShift or Rancher, there are a large number of moving parts that require significant configuration to get running in your environment, even for a limited implementation, and maintaining both stability and security can be a challenge even for a seasoned Kubernetes team. Using an enterprise vendor-provided Kubernetes product will largely alleviate this issue, but it is still critical to spend time up front defining the required feature set and intended use for your Kubernetes rollout to avoid headaches later on.

Risks Springing From Ongoing Maintenance

Once a Kubernetes cluster is up and running, ongoing management and maintenance are fairly straightforward, though they do require that the team maintaining the cluster be familiar with Kubernetes concepts. When running an on-premises Kubernetes cluster, typical systems maintenance tasks like OS-level patching, software upgrades, and hardware maintenance all apply to your Kubernetes components, but the processes used to prepare for these events will be different. To give a basic example, before patching a Kubernetes Node you will want to safely drain it, stopping new Pods from being scheduled there and having its existing workloads rescheduled onto other nodes, so that you continue to meet your service-level objectives; doing this well requires detailed knowledge of how Kubernetes behaves at the application level. Operations engineers who are unfamiliar with Kubernetes internals will have problems handling even basic maintenance functions.
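Kubernetes’ own tooling for this is `kubectl drain`, which cordons the node (marks it unschedulable) and then evicts its Pods through the Eviction API, which respects any PodDisruptionBudget (PDB) you have defined. The sketch below models only the budget check, under the simplifying assumption of one PDB with a `minAvailable` count per application; the `Pod` class and the `eviction_allowed` and `plan_drain` functions are hypothetical and are not part of any Kubernetes client library.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    app: str
    node: str
    healthy: bool = True


def eviction_allowed(pod: Pod, pods: list[Pod], min_available: dict[str, int]) -> bool:
    """Simplified PodDisruptionBudget check (hypothetical model).

    An eviction is allowed only if, after removing this pod, the number of
    healthy pods for the same app stays at or above its minAvailable value.
    Apps without a budget can always be evicted.
    """
    budget = min_available.get(pod.app)
    if budget is None:
        return True
    healthy = sum(1 for p in pods if p.app == pod.app and p.healthy)
    # Evicting a healthy pod reduces the healthy count by one.
    after = healthy - 1 if pod.healthy else healthy
    return after >= budget


def plan_drain(node: str, pods: list[Pod],
               min_available: dict[str, int]) -> tuple[list[str], list[str]]:
    """Split the pods on `node` into those evictable now and those that must wait.

    Blocked pods would be retried once replacement pods become healthy elsewhere.
    """
    evictable, blocked = [], []
    remaining = list(pods)
    for pod in [p for p in pods if p.node == node]:
        if eviction_allowed(pod, remaining, min_available):
            evictable.append(pod.name)
            remaining.remove(pod)  # an evicted pod no longer counts toward its budget
        else:
            blocked.append(pod.name)
    return evictable, blocked


if __name__ == "__main__":
    cluster = [
        Pod("web-1", "web", "node-a"),
        Pod("web-2", "web", "node-a"),
        Pod("web-3", "web", "node-b"),
        Pod("batch-1", "batch", "node-a"),
    ]
    # Keep at least two healthy "web" pods at all times; "batch" has no budget.
    evict_now, wait = plan_drain("node-a", cluster, {"web": 2})
    print("evict now:", evict_now)
    print("wait for replacements:", wait)
```

Here only one of the two `web` Pods on `node-a` can leave immediately; the second must wait until the ReplicaSet has brought up a healthy replacement elsewhere, which is exactly the kind of behavior an operator needs to anticipate when scheduling maintenance windows.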

The risks inherent in ongoing maintenance of your Kubernetes environment can be mitigated through basic training, documentation, and new internal processes that support your Kubernetes environment and applications. Alternatively, you can use a cloud-based turnkey vendor for your Kubernetes implementations. The three main cloud services vendors all provide a managed Kubernetes service: Amazon with Amazon Elastic Kubernetes Service (EKS), Microsoft with Azure Kubernetes Service (AKS), and Google Cloud with Google Kubernetes Engine (GKE). All of these provide Kubernetes-as-a-service functionality that allows you to harness the power of Kubernetes without having to install and maintain the control plane yourself.

Risks Associated with Internal Adoption

Managing the adoption of an enterprise Kubernetes implementation can also cause problems; the overall success of migrating to this type of platform will be heavily dependent on how well your development teams leverage the power and capabilities provided by the platform. Taking an existing monolithic application and shoehorning it into a Kubernetes cluster will likely result in a substandard experience; many of the benefits of container orchestration are only realized when the application being orchestrated is composed of microservices (or, at least, services with a small footprint that are independently scalable). It is critical that development teams understand the benefits of this type of architecture and plan out any development or migration project with the intent of leveraging those benefits for the good of the organization.

A secondary risk is that a Kubernetes adoption effort is too successful. Because the orchestration layer runs on a VM- or server-based pool of compute, memory, and storage resources, it is limited by the bounds of that pool: Pods whose resource requests cannot be satisfied remain unscheduled (Pending), and when a node runs short of memory, the kubelet evicts Pods, while containers that exceed their memory limits are terminated. Specifying resource requests and limits at the container level can help optimize the use of these shared resources, but scaling the Kubernetes environment itself can become a concern depending on how many projects will be deployed to a cluster. This touches on a Kubernetes best practice (which we’ll cover in more detail in a separate whitepaper): favor increasing the number of clusters over increasing the size of existing clusters.
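Requests and limits also determine a Pod’s Quality of Service (QoS) class, which the kubelet takes into account when it must evict Pods under node resource pressure; roughly, BestEffort Pods are the first candidates and Guaranteed Pods the last. The sketch below classifies a Pod from its containers’ CPU and memory settings following the rules in the Kubernetes documentation. The plain-dictionary input format and the `qos_class` function are assumptions made for brevity, and quantities are compared as strings rather than parsed.

```python
def qos_class(containers: list[dict]) -> str:
    """Classify a Pod's QoS class from its containers' resources.

    Each container is a dict like {"requests": {"cpu": "100m"}, "limits": {...}}.
    Values are compared as strings for simplicity, so "1" and "1000m" would be
    treated as different (a real implementation parses quantities).
    Init containers are ignored in this sketch.
    """
    any_set = False
    guaranteed = True
    for c in containers:
        limits = c.get("limits", {})
        # Kubernetes defaults a missing request to the limit when a limit is set.
        requests = {**limits, **c.get("requests", {})}
        for resource in ("cpu", "memory"):
            if resource in requests or resource in limits:
                any_set = True
            # Guaranteed requires every container to set both a request and a
            # limit for CPU and memory, with requests equal to limits.
            if resource not in limits or requests.get(resource) != limits[resource]:
                guaranteed = False
    if not any_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


if __name__ == "__main__":
    print(qos_class([{}]))
    print(qos_class([{"limits": {"cpu": "500m", "memory": "256Mi"}}]))
    print(qos_class([{"requests": {"cpu": "100m", "memory": "128Mi"},
                      "limits": {"cpu": "500m", "memory": "256Mi"}}]))
```

In practice, this means critical workloads should set requests equal to limits if you want them to be the last Pods affected when a shared cluster runs short of resources.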

Maintaining a consistent rollout plan for your Kubernetes migration will help to mitigate both of these areas of risk; in addition, most of the major enterprise Kubernetes vendors provide tooling to help with the second risk. As with any transformational organizational initiative, careful planning and execution are critical to ensure the success of the effort.

Benefits of Kubernetes

The benefits of moving to a Kubernetes-based platform for your enterprise applications are significant; the level of automation and abstraction provided by the platform enables your organization to develop and manage truly distributed applications using microservices while keeping the associated maintenance costs and risks under control. Relying on distributed applications without an orchestration platform in place is a recipe for disaster, and among orchestration platforms, Kubernetes offers a number of benefits that make it an especially attractive choice.

1. Improved Software Delivery

Some of the most striking benefits that organizations realize from containers and container orchestration are drastic improvements to the software delivery process. Using containers as a delivery mechanism for software applications immediately improves developer agility by enabling individual engineers to run consistent application instances wherever needed. This often removes a significant pain point in software delivery, with the side benefit of keeping applications consistent from environment to environment. Kubernetes multiplies the advantages of containerization by wrapping container management in a powerful automation engine, which makes advanced delivery practices such as blue-green deployments and canary releases much easier to execute.
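One common way to run a simple canary release with plain Kubernetes objects is to run two Deployments, a stable one and a canary, whose Pods share the label that a Service selects on (for example `app: web`) while a second label such as `track: stable` or `track: canary` tells them apart. Because the Service spreads traffic across all matching Pods, the canary’s share of traffic is roughly its share of replicas. The helper below only computes that replica split; the `canary_split` function, its rounding policy (at least one canary and at least one stable replica), and the label names are assumptions for illustration, and finer-grained traffic shaping normally requires an ingress controller or service mesh.

```python
import math


def canary_split(total_replicas: int, canary_percent: float) -> tuple[int, int]:
    """Return (stable, canary) replica counts for a canary release.

    Because a Service spreads connections across all matching Pods, the
    canary's share of traffic is approximately canary / total.
    """
    if total_replicas < 2:
        raise ValueError("need at least two replicas to run a canary alongside stable")
    if not 0 < canary_percent < 100:
        raise ValueError("canary_percent must be between 0 and 100 (exclusive)")
    # Round up so a small percentage still yields one canary Pod,
    # but always leave at least one stable Pod.
    canary = min(max(1, math.ceil(total_replicas * canary_percent / 100)),
                 total_replicas - 1)
    return total_replicas - canary, canary


if __name__ == "__main__":
    stable, canary = canary_split(10, 10)
    # Both Deployments' Pods carry app=web; the Service selects only on app=web,
    # while the track label distinguishes the two Deployments.
    print({"track": "stable", "replicas": stable}, {"track": "canary", "replicas": canary})
```

Promoting the canary then amounts to updating the stable Deployment’s image and scaling the canary Deployment back to zero, both of which Kubernetes rolls out automatically.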

2. Automation at Massive Scale

One of the main benefits of using any orchestration system is its ability to manage the things that the system is orchestrating without direct human intervention; Kubernetes is no exception. In the case of Kubernetes, what you are ultimately orchestrating are the individual components that make up your business applications; part and parcel of this orchestration is the ability to ensure that each component is behaving as expected, and the automation required to take corrective action when it’s not. To that end, Kubernetes provides the ability to automate each of the following:

- Multidimensional scaling of individual components to meet increased load requirements.

- Managing health checks on individual application components and self-healing or auto-replacing components that crash or are not behaving as expected.

- Dynamic load balancing and traffic shaping, providing powerful ways to distribute traffic to react to outages or bursty traffic.

- Integration with distributed resource monitoring and tracing tools, allowing you to track the operations of all of your containerized applications even as you scale to hundreds or thousands of deployed containers.
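For horizontal scaling, the Kubernetes documentation gives the HorizontalPodAutoscaler’s core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change made when the ratio is within a tolerance (0.1 by default), and the result bounded by the configured minimum and maximum replica counts. The function below is a sketch of just that calculation; the `desired_replicas` name and its default bounds are assumptions, and it deliberately omits the stabilization windows, unready Pods, and missing metrics that the real controller accounts for.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HorizontalPodAutoscaler replica calculation."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target.
    print(desired_replicas(4, 90, 60))
```

For example, 4 replicas averaging 90% CPU against a 60% target yields ceil(4 × 1.5) = 6 replicas, while an average of 63% falls within the tolerance and leaves the replica count unchanged.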

3. Improved Resource Utilization

One of the key benefits of containerization has been the ability to make more effective use of available compute resources by running multiple containers on a single host machine, sharing compute, OS, and network resources while maintaining application isolation. In addition, containers are much more lightweight than traditional virtual machines, making them a more efficient package for application deployment. Kubernetes builds on these strengths by scheduling container instances across nodes based on their declared resource requests and recreating them on other nodes when needed, helping keep available resources well utilized as application workloads change.
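To illustrate why packing containers onto shared nodes improves utilization, the sketch below places Pods onto identical nodes by their CPU and memory requests using first-fit decreasing, a classic bin-packing heuristic. This is a deliberate simplification: the real kube-scheduler filters nodes on requests and then scores the candidates with configurable plugins rather than using this algorithm. The `pack` function, the node capacity, and the Pod sizes are made-up values for illustration.

```python
def pack(pods: dict[str, tuple[int, int]],
         node_capacity: tuple[int, int]) -> list[list[str]]:
    """Assign Pods to identical nodes using first-fit decreasing.

    pods maps a Pod name to its (cpu_millicores, memory_mib) requests;
    node_capacity is the allocatable (cpu_millicores, memory_mib) per node.
    Returns one list of Pod names per node used.
    """
    nodes: list[list[str]] = []
    free: list[list[int]] = []  # remaining [cpu, memory] on each node
    # Place the largest Pods first, ordered by (cpu, memory) descending.
    for name, (cpu, mem) in sorted(pods.items(), key=lambda kv: kv[1], reverse=True):
        if cpu > node_capacity[0] or mem > node_capacity[1]:
            raise ValueError(f"pod {name} can never fit on a node")
        for i, (free_cpu, free_mem) in enumerate(free):
            if cpu <= free_cpu and mem <= free_mem:
                nodes[i].append(name)
                free[i][0] -= cpu
                free[i][1] -= mem
                break
        else:
            # No existing node has room: start a new one.
            nodes.append([name])
            free.append([node_capacity[0] - cpu, node_capacity[1] - mem])
    return nodes


if __name__ == "__main__":
    requests = {"a": (1000, 1024), "b": (500, 512), "c": (1500, 2048),
                "d": (500, 512), "e": (250, 256)}
    # Nodes with 2 CPUs (2000m) and 4 GiB (4096 MiB) allocatable.
    print(pack(requests, (2000, 4096)))
```

With these values, five workloads that might otherwise each occupy a dedicated VM fit onto two nodes.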

4. Market Dominance

One of the most striking things about Kubernetes’ place in the overall container orchestration market is how overwhelming a lead it has taken in such a short time. Although it has a number of competitors of comparable product maturity, Kubernetes has become the de facto standard for deploying applications using container technology. The reasons for this dominance could fill a separate blog post, but they boil down to the fact that Kubernetes provides an excellent balance between functionality and flexibility, and the relatively open architecture of the platform has enabled vendors and individual contributors alike to improve and extend the product with a minimum of overhead. When evaluating a platform that is intended to be pervasive throughout software delivery in your organization, it is critical that the solution be flexible enough to support all of your business needs while at the same time providing enough structure to ease the day-to-day requirements of rapid delivery and maintenance of your business applications. Kubernetes hits this sweet spot dead-on.

Roadmap for Kubernetes In Your Organization

The information presented in this whitepaper can help you understand the basics of Kubernetes and the risks and benefits associated with it, but what’s the next step?

1. Select an Implementation Target

Ideally, you should choose a relatively isolated, atomic business process or application to be the initial proving ground for your Kubernetes initiative. This type of implementation is best handled in an incremental fashion within your organization, and the best way to ensure success will be to target a business process that has certain intrinsic attributes which make it well-suited to be a Kubernetes-based application; these attributes include the following:

- The application or process is reasonably atomic, with well-defined boundaries.

- The application is easily containerized.

- The application is already built around microservices.

- The resource requirements for the application are well-understood.

- The overall business impact of the application is measurable.

- The application is not critical for business operations.

Note that these are not all specific to Kubernetes; a few of these would apply to any major platform migration. Risk management is a critical part of success in these types of watershed implementation efforts; choosing the right initial project can mean the difference between successful organizational transformation and failure.

2. Choose a Kubernetes Vendor Platform

For an enterprise, it simply makes economic and practical sense to go with an enterprise Kubernetes platform rather than rolling your own environment; the risks of the latter are far too substantial, and chances are that, as a business, you’re not looking to build Kubernetes administration and operations as a core business practice. The goal here is to enable your organization to deliver on its core business more reliably and effectively, so it’s a clear win to offload the operational and maintenance risks of running your Kubernetes platform onto a third party. There are a host of available vendors that provide Kubernetes-based products; in this whitepaper, we have mentioned OpenShift, Rancher, AWS EKS, and Azure Kubernetes Service. The competitive landscape of the enterprise cloud-native space is changing rapidly, so we strongly recommend a full competitive analysis focusing on your organizational needs; some considerations to take into account include:

- Hosting: cloud-based, on-premises, or hybrid?

- Existing vendor relationships and/or services used.

- Features needed (e.g. RBAC, an administrative UI, Active Directory integration).

- Scaling requirements.

- And, of course…cost!

3. Carefully Plan your Success Criteria

When embarking on a migration project that is intended to fundamentally change the way that you build and deliver your business applications, it is absolutely critical that the metrics that will determine the overall success of the effort be defined and well-understood at the outset. Any implementation of this type will by its very nature adapt over time; engineers will find more efficient processes to follow, developers will find new ways to streamline their work, and of course the business will experience changes to service-level objectives driven by adaptations to market conditions. Determining the objective measures by which the migration project can be termed a “success” will give a quantifiable way to establish progress throughout the course of the project and will give all stakeholders a clear picture of the overall goals of the project, both in the short and long term.

When determining the success criteria for an initial Kubernetes migration, we recommend taking the following considerations into account when outlining the metrics that will determine success:

- Initially, keep overall performance objectives modest; shoot for overall performance to be comparable to or slightly better than your existing applications.

- On the operational side, the objective should be to reduce overall delivery time to get features deployed. Focus metrics on objectives that support that goal.

- For development, the migration to Kubernetes should improve overall quality by homogenizing the delivery pipeline. This will require some cultural shift, though, as developers learn to take advantage of the benefits of containerization.

- For the business, the initial effects of a successful Kubernetes implementation will primarily impact overall reliability and flexibility; an orchestrated application should be more consistent in its overall performance and stability, even under unpredictable load.
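One way to make the delivery-time objective measurable is to track lead time for changes: the elapsed time from a change being committed to it running in production. The sketch below computes the median lead time from pairs of timestamps; the `median_lead_time` function is hypothetical, and where those timestamps come from (for example, your version control system and deployment pipeline) is an assumption you would need to fill in for your own tooling.

```python
from datetime import datetime, timedelta
from statistics import median


def median_lead_time(changes: list[tuple[datetime, datetime]]) -> timedelta:
    """Median time from commit to production deployment.

    Each item is (committed_at, deployed_at) for one change.
    """
    if not changes:
        raise ValueError("no changes to measure")
    seconds = [(deployed - committed).total_seconds() for committed, deployed in changes]
    return timedelta(seconds=median(seconds))


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 9, 0)
    # Hypothetical sample: three changes deployed 2, 26, and 5 hours after commit.
    sample = [(start, start + timedelta(hours=2)),
              (start, start + timedelta(hours=26)),
              (start, start + timedelta(hours=5))]
    print(median_lead_time(sample))
```

Using the median rather than the mean keeps a single unusually slow change from dominating the figure; capturing it before and after the migration gives a simple, quantifiable baseline for the operational objective.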

Measures of the longer-term benefits of orchestration, such as increased operational agility, an accelerated software delivery cycle, and enhanced interactions with end-users should be tracked once the initial Kubernetes implementation has been deemed a success. Focus on the immediate goals of the application that you are migrating first, and then focus on the long-term benefits of the platform once that application is successfully running in an orchestrated manner.

4. Align with Corporate Culture

The overall goal of any Kubernetes implementation in the enterprise is to build a cloud-native platform that can scale up to fit the needs of the business across the entire organization. While you should always focus on the needs of the immediate project when running an implementation, it pays to keep in mind that Kubernetes should be an enterprise-wide tool. Pay close attention to any adjustments you wind up making to your operational strategy during project implementation, and make certain that your Kubernetes implementation closely aligns with your standard organizational processes and culture. Even though Kubernetes represents an evolutionary change in the way that we deliver and manage applications and business workloads, the overall success of a migration still depends on how well the organization can adapt to using the tooling (and how well the tooling can adapt to fit your organization!). Kubernetes was built to be exceedingly flexible; one of the reasons that it has seen such pervasive adoption in such a short time is precisely because while it does provide a solid structure to use when delivering next-generation applications, it is not opinionated when it comes to how those applications are built. This means that you can easily adapt it to fit your organizational needs, minimizing overall disruption and risk while at the same time adding the full power of container orchestration to your toolkit.

Summary

As distributed applications become more and more critical to successful business efforts, and the benefits of containerization and microservices to those efforts become more apparent, the Kubernetes container orchestration platform has become a de facto standard in how organizations deploy, manage, and scale their container-based distributed applications. Overall, the benefits of using Kubernetes are significant, but they come with an associated set of risks which must be managed; the enterprise Kubernetes ecosystem has evolved to meet precisely this need. Companies like IBM, Amazon, and Microsoft are aggressively working to bring enterprise-class features to the container orchestration market, and as a result, Kubernetes has become accessible for organizations regardless of their technical maturity. Even with the features and additions made by enterprise Kubernetes vendors, it is critical that modern organizations understand the implications of designing, delivering, and operating cloud-native applications; successful transformation into a distributed cloud-native organization requires careful planning and understanding in addition to successful implementation and migration efforts.

Are you interested in exploring how to bring the power and flexibility of Kubernetes into your organization? XTIVIA can help! With a long history of enterprise application implementation coupled with cutting-edge cloud-native architectural and development experience, XTIVIA is the perfect partner to help you bring your enterprise to the cloud. Contact us today!