Over the last few decades, ETL testing has gained considerable traction, especially with the emergence of Agile, DevOps, artificial intelligence, and cloud technologies as top trends in the software development industry. The reason for that traction? The modern enterprise’s dependency on data.

This pervasive dependency on data calls for the right testing strategy, planning, and execution to ensure that data is managed, transformed, and loaded accurately. That’s why ETL testing is essential, and why we’d like to elaborate on the topic, from the basic concept to its inner workings.

What is ETL?

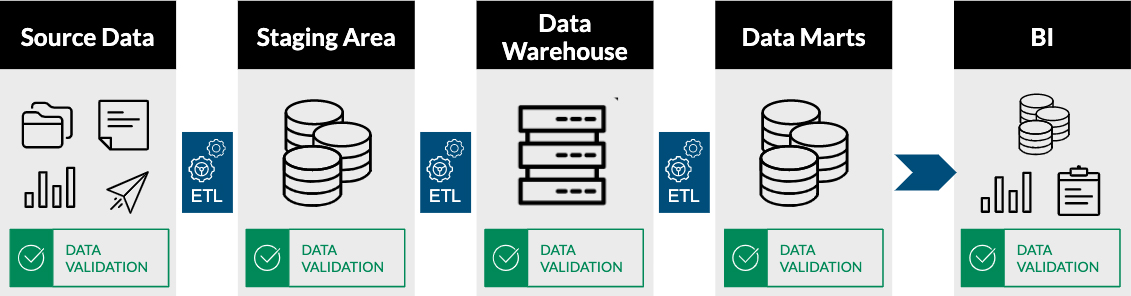

First off, ETL stands for extract, transform, and load: extracting data from different source systems, transforming it into one format, and loading it into a data warehouse and data marts. As depicted in the diagram above, there are multiple layers of data transformation.

During the extraction process, data is obtained from different source systems and loaded into a staging area. Once in the staging area, transformation occurs, and then the data is loaded into a Data Warehouse. Business users can run reports from the Data Warehouse to analyze the data and use it for business insight and decision-making.

Data Marts are at the next level, usually divided into different subject areas, such as finance, HR, accounting, customer data, or product information, to name a few examples. Finally, Business Intelligence (BI) applications come into the picture to consume analytical reporting data.
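The extract, transform, and load steps described above can be sketched in a few lines. This is only an illustration under stated assumptions: the two source formats, the `fact_sales` table, and every function name here are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import sqlite3

# Hypothetical rows from two source systems that store the same facts differently.
SOURCE_A = [{"id": 1, "amount": "10.50"}, {"id": 2, "amount": "7.25"}]
SOURCE_B = [{"ID": "3", "AMT_CENTS": 1999}]

def extract():
    """Extract: copy raw rows from each source into a staging list, unchanged."""
    return [("a", row) for row in SOURCE_A] + [("b", row) for row in SOURCE_B]

def transform(staged):
    """Transform: convert every staged row to one common (id, amount_cents) format."""
    rows = []
    for origin, row in staged:
        if origin == "a":
            rows.append((int(row["id"]), round(float(row["amount"]) * 100)))
        else:
            rows.append((int(row["ID"]), int(row["AMT_CENTS"])))
    return rows

def load(conn, rows):
    """Load: write the transformed rows into the (assumed) warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS fact_sales "
        "(id INTEGER PRIMARY KEY, amount_cents INTEGER NOT NULL)"
    )
    conn.executemany("INSERT INTO fact_sales VALUES (?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for the data warehouse
load(conn, transform(extract()))
warehouse_rows = conn.execute(
    "SELECT id, amount_cents FROM fact_sales ORDER BY id"
).fetchall()
```

In a real pipeline, each step would typically be handled by an ETL tool rather than hand-written functions, but the division of responsibilities is the same.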

ETL Example

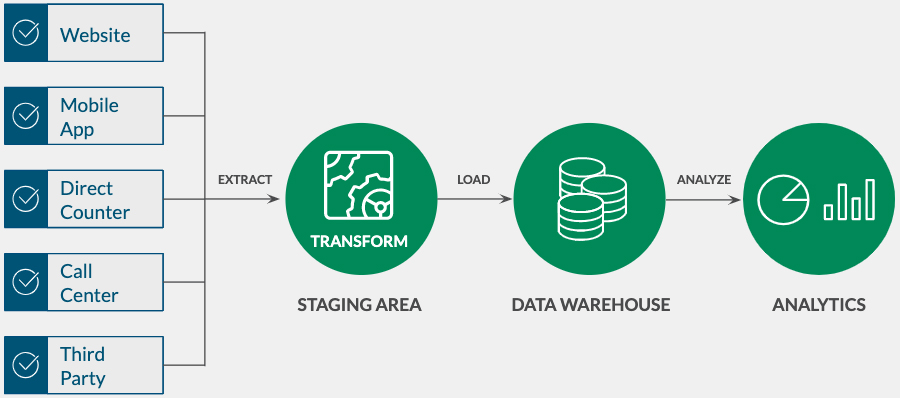

One of our clients, the largest fleet management firm globally, takes reservations through different source systems such as websites, mobile apps, direct counters, call centers, and third-party applications. The challenge they faced was that each booking system had its own database, its own way of storing data, and its own format, making it difficult for the client to analyze reservations and customer data.

The solution we implemented was to transform the data into a standard format and move it into one database (i.e., the data warehouse), making data analysis much more accessible. Their new data consumption capabilities allowed them to streamline operations and effectively develop customer-centric strategies.

Given that example, let’s look at some of ETL’s other benefits to organizational efficiency.

Top Four Benefits of ETL

1) Data Repository

The term “data repository” refers to a collection of all relevant data in one place. As a business moves through the ETL process, it’ll consolidate the data after transformation and load it into the data warehouse, as we mentioned above. This consolidation allows users to easily access all the data from one portal, which helps project and business teams spend more time performing data analysis and less time gathering the data. Thus, the data repository becomes a one-stop-shop for users to get the information they need efficiently and securely.

2) Data Consistency and Quality

Regardless of industry, revenue, or market size, every organization relies on its data to produce useful information that fuels decision-making. Unfortunately, when that data is inaccurate or incomplete, it loses much of its potential to drive business development, which can result in lost revenue.

Organizations need to determine the severity of data quality issues and their impact on the bottom line. Fortunately, the ETL process is designed to enhance data quality and consistency by cleansing the data and removing duplicate and redundant records.
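As a rough illustration of what cleansing and de-duplication can mean in practice, the sketch below normalizes a key field and keeps only the first record per key. The record layout and the rule that an email address identifies a customer are assumptions made for this example, not rules from any particular project.

```python
def cleanse(records):
    """Normalize emails, drop rows without one, and keep the first row per email."""
    seen = set()
    cleaned = []
    for rec in records:
        email = rec["email"].strip().lower()
        if not email or email in seen:
            continue  # skip incomplete rows and duplicates
        seen.add(email)
        cleaned.append({"email": email, "name": rec["name"].strip()})
    return cleaned

# Hypothetical raw customer records with inconsistent formatting.
raw = [
    {"email": " Ann@Example.com ", "name": "Ann "},
    {"email": "ann@example.com", "name": "Ann"},  # duplicate after normalization
    {"email": "", "name": "No Email"},            # incomplete record
    {"email": "bob@example.com", "name": "Bob"},
]
cleaned = cleanse(raw)
```

Note that the duplicate only becomes visible after normalization, which is why cleansing usually runs before de-duplication.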

3) Improved Query and System Performance

Data warehouses are built and structured differently from operational transaction systems. Transaction systems focus on creating and modifying data, while data warehouses are made explicitly for analysis and retrieval. Their entire design is based on storing a large amount of data while still accelerating data retrieval and analysis.

4) Improved Business Intelligence

One of the ETL process’s main objectives is to improve the BI framework. Business leadership no longer has to make decisions based on incomplete information, and decisions are founded upon data-driven facts and supported by relevant information. The leaders are also better informed about data queries and can retrieve information about their specific needs.

The business intelligence you gain can be directly applied to business processes like market segmentation, inventory management, financial management, and sales, to name a few examples. In our years of implementing ETL and BI practices, XTIVIA has seen numerous organizations significantly increase their efficiency and revenue.

The Importance of ETL Testing

Testing ETL solutions is just as vital and valuable as implementing them. One of the primary reasons ETL testing is so crucial is that it ensures the data loaded into target systems is accurate across the board. Throughout the ETL process, data passes through several levels of cleansing and transformation because it is sourced from different databases and in various formats. This increases the risk of data being lost or missed when it is consolidated into the data warehouse. By testing, we ensure the data loaded into the data warehouse is correct, that none of it is lost, and that no duplicates are loaded.
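The checks for lost and duplicated data can be expressed as a comparison of business keys between source and target. The following is a minimal sketch; the function name, the report fields, and the sample keys are all hypothetical.

```python
from collections import Counter

def completeness_report(source_keys, target_keys):
    """Compare business keys to find lost, unexpected, and duplicated rows."""
    source_set = set(source_keys)
    target_counts = Counter(target_keys)
    return {
        "missing_in_target": sorted(source_set - set(target_counts)),
        "unexpected_in_target": sorted(set(target_counts) - source_set),
        "duplicated_in_target": sorted(k for k, n in target_counts.items() if n > 1),
    }

# Hypothetical keys: 103 was lost, 102 was loaded twice, 999 has no source row.
report = completeness_report([101, 102, 103, 104], [101, 102, 102, 104, 999])
```

With real volumes, the same comparison is usually pushed down to the database as SQL, but the three questions it answers stay the same.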

We’re also ensuring that business transformations are correct. Testing confirms that they are developed and working correctly by checking each transformation listed in the requirement or mapping document.

Additionally, ETL testing gives us the reconciliation of data transfers. There are two phases of data transformation and loading. The first is the bulk or initial load, where the entire data set is loaded. The second is the incremental load, where only the delta between the source and the target is loaded at a defined frequency. Depending on an organization’s needs, these can be hourly, daily, or weekly scheduled jobs. The testers ensure that data has been loaded correctly in both the initial and incremental phases.
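To make the idea of a delta concrete, here is a small sketch that computes which rows an incremental load would need to insert or update, then reconciles the target against the source. It assumes rows are keyed by a primary key and held in dictionaries; the names and sample values are illustrative, and deleted source rows are deliberately left out of scope.

```python
def compute_delta(source, target):
    """Return (inserts, updates): rows new to the target and rows whose values changed."""
    inserts = {k: v for k, v in source.items() if k not in target}
    updates = {k: v for k, v in source.items() if k in target and target[k] != v}
    return inserts, updates

def apply_delta(target, inserts, updates):
    """Return a copy of the target with the incremental changes applied."""
    merged = dict(target)
    merged.update(inserts)
    merged.update(updates)
    return merged

# Hypothetical customer tiers keyed by customer id.
source = {1: "gold", 2: "silver", 3: "bronze"}
target = {1: "gold", 2: "basic"}  # state left behind by the previous load

inserts, updates = compute_delta(source, target)
reconciled = apply_delta(target, inserts, updates)
```

A tester can use the same comparison after an incremental job runs: if the delta between source and target is not empty, something was missed.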

Finally, ETL testing helps identify problems with the source and target systems. Right from the start, testers can point out issues before the data is loaded into the data repository. They can also spot discrepancies or ambiguities in business rules.

In summary, ETL testing ensures data completeness, correctness, integrity, and quality.

ETL Testing Life Cycle

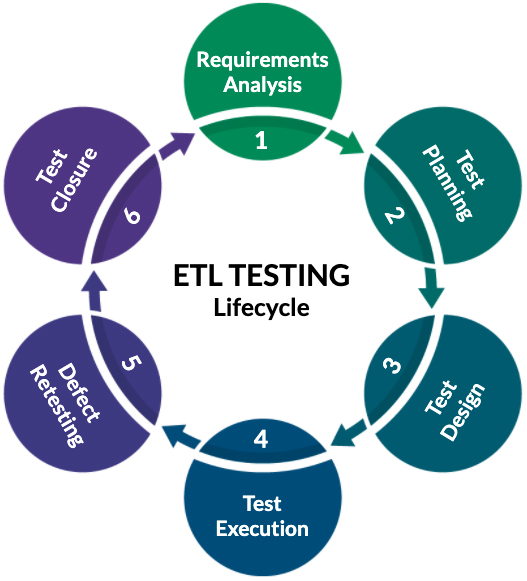

Like any other testing process, ETL testing passes through various phases, as shown in the diagram above.

The first phase is requirement analysis. During this phase, the testing team gathers the required documents, such as mapping documents, data models, and information on the source and target systems. Once these are procured, the team analyzes the details and reaches out to business analysts for any clarifications.

The next phase is test planning, where we strategize, plan out how we’ll design and execute our testing, and document it for reference. Within that documentation, it’s critical to include what’s in scope and out of scope, testing entry criteria, exit criteria, risks, mitigations, and other relevant details needed to ensure a successful round of testing.

During test design, test cases and SQL queries are prepared, and the testing team analyzes the source data in conjunction with the mapping document. They’ll look at the data model’s different attributes, specifically the tables, columns, and data types, and uncover any requirement-related defects.

Once designed, the next stage is test execution, wherein the actual testing occurs. Once the ETL jobs are run and complete, the testing team will start executing their test cases as per the mapping document, and all of the transformations will be tested for accuracy and effectiveness.

The next phase is defect retesting. During the test execution phase, the QA team pinpoints faults and assigns them to the development team to fix. Once these defects are corrected, the dev team reruns the jobs in the ETL tool to process the data, and the QA team retests and performs any necessary regression testing. If the retest is successful, the issues are closed. If not, they’re reopened for another round of evaluation.

The final stage is signing off the test activity. Once the test execution and retesting phases are complete without any outstanding defects, the QA team will sign off on testing.

ETL Data Validation Process

The next ETL activity we’d like to cover is the ETL Data Validation Process, which occurs in two stages. The first stage is the validation of the initial load. Once the ETL logic is developed, it’s generally run against the entire data set. This is a vast data set that takes time to process and load. Because this initial load is a one-time load, the testing team verifies that transformations and data quality rules are applied correctly.

The next stage is to validate the incremental load. This data is the delta between the source and target systems. Again, the testing team ensures transformations and data quality rules are applied to newly added data and existing data that has been updated.

Components of ETL Testing

There are five essential components of ETL Testing:

1. Source to Target Validation: A record count validation is performed to confirm that the number of records in the source and target systems matches.

2. Metadata Testing: Verify table definitions conform to the data model and application design specifications. In doing so, we perform data type, length, index, and constraint checks.

- For data type and length checks, we ensure that the proper data type and length are present in the target system.

- For index checks, we verify that the unique key and foreign key columns are indexed as per the requirement.

- For constraint checks, we verify, for example, that the columns that cannot be null have the “NOT NULL” constraint.

- If you’re testing a data warehouse, dimension and fact testing come into play as well. Here we validate that there’s a proper relationship between the dimension and fact tables.

3. Data Transformation Testing: We test the transformations against the mapping document, ensuring all business rules and transformations are implemented correctly and the results are loaded into the target systems.

4. Data Quality Testing: Data quality testing verifies that all data quality rules are applied accurately.

5. Integration Testing: Finally, we execute end-to-end testing to confirm the correct data flows between upstream and downstream systems.
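Three of the components above (source to target validation, metadata testing, and data transformation testing) can be sketched against an in-memory SQLite database. Everything here is an assumption made for illustration: the `src_customer` and `tgt_customer` tables, the helper names, and the mapping rule that `full_name` is the first and last name joined by a space. The metadata check relies on SQLite’s `PRAGMA table_info`; other databases expose this information differently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_customer (id INTEGER, first_name TEXT, last_name TEXT);
    CREATE TABLE tgt_customer (id INTEGER NOT NULL, full_name TEXT NOT NULL);
    INSERT INTO src_customer VALUES (1, 'Ada', 'Lovelace'), (2, 'Alan', 'Turing');
    INSERT INTO tgt_customer VALUES (1, 'Ada Lovelace'), (2, 'Alan Turing');
""")

def row_count(table):
    """Source to target validation: count the rows in one table."""
    # Table names are hard-coded in this sketch, so formatting them into SQL is safe here.
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]

def not_null_columns(table):
    """Metadata testing: list columns that carry a NOT NULL constraint."""
    # Each PRAGMA table_info row is (cid, name, type, notnull, dflt_value, pk).
    return {row[1] for row in conn.execute(f"PRAGMA table_info({table})") if row[3] == 1}

def transformation_mismatches():
    """Transformation testing: ids whose target value breaks the assumed mapping rule."""
    query = """
        SELECT s.id
        FROM src_customer AS s
        LEFT JOIN tgt_customer AS t ON t.id = s.id
        WHERE t.id IS NULL
           OR t.full_name <> s.first_name || ' ' || s.last_name
    """
    return [row[0] for row in conn.execute(query)]

counts_match = row_count("src_customer") == row_count("tgt_customer")
required_columns = not_null_columns("tgt_customer")
mismatches = transformation_mismatches()
```

An empty `mismatches` list means every source row arrived in the target with the expected transformed value; any id in the list is a candidate defect to raise.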

Tools for ETL Testing

Shown below are some of the more popular tools used for ETL testing. Although we won’t be going into these tools’ intricacies and capabilities, please reach out with any questions you may have about them.

Prerequisites of ETL Testing

The first prerequisite of ETL Testing is to make sure we have a clear understanding of business needs and transformations. Before a tester starts working on any of their tasks or deliverables on ETL testing, it’s always advisable to have a solid end-to-end understanding of the business need and all the transformations to be developed.

Next is appropriate access to source and target systems. Raising the required access request as soon as possible in the testing lifecycle and conducting necessary follow-ups to get access on time is always recommended.

- If your role requires you to run and monitor ETL jobs, make sure you have the proper access to the ETL tools. This might include running the workflows, checking the log files, and watching the jobs. If your role doesn’t require you to access ETL tools for these purposes, make sure you have a contact point with the person in charge of this to help during testing.

Finally, the testing team needs to have the final mapping document handy while testing, and testing environments must be available and operational. This is essential on any project, but especially on ETL projects, where there’s a need to handle massive amounts of data in different formats. The testing team or lead needs to ensure the testbed is set up correctly, with all the necessary access in place, well before test execution starts.

ETL Testing Best Practices

To conclude our discussion, we’d like to cover some ETL Testing best practices.

- Understanding the implemented database design and data models is essential to successful ETL testing. This knowledge helps with understanding the relationships between the tables and data that is being tested.

- Make sure your documentation is comprehensive and complete. One way to stay in line with changes as they occur is to hold regularly scheduled meetings to review all modifications to the mapping document.

- Get your business users involved in the testing process, and have SMEs available to address any questions or concerns.

- Always consider performance. When testers are involved in running and monitoring ETL jobs, they should document the time needed for each data load and raise appropriate flags when they see any delays or errors. These issues can be sent to the development team to analyze and fix.

- Finally, automate ETL testing as much as possible to make retest/regression testing easier and more efficient.
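One lightweight way to act on the performance practice above is to time each load and flag runs that exceed an agreed limit. The sketch below is only an illustration: `timed_run`, `sample_load_job`, and the five-second limit are hypothetical, and a real ETL job would be started through the ETL tool rather than called as a Python function.

```python
import time

def timed_run(job, max_seconds):
    """Run a job, record how long it took, and flag it if it exceeded the limit."""
    start = time.perf_counter()
    result = job()
    elapsed = time.perf_counter() - start
    return {
        "result": result,
        "elapsed_seconds": elapsed,
        "too_slow": elapsed > max_seconds,
    }

def sample_load_job():
    """Placeholder for a real data load; here it only sums some numbers."""
    return sum(range(1000))

# The five-second limit is an arbitrary example, not a recommended threshold.
run = timed_run(sample_load_job, max_seconds=5.0)
```

Recording `elapsed_seconds` for every run gives the team a baseline, so a slowdown shows up as a trend rather than a surprise.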

ETL testing is immensely important for any business trying to advance the utilization of its data and BI initiatives. Forgoing ETL testing will not only lead to dirty data and missed opportunities, but it can also affect the overarching structure of business processes and leave users bogged down by inefficiencies. However, if you follow the methods and best practices outlined here, you’ll position yourself to make better business decisions and maximize your data’s potential.
