This blog post covers upgrading an Amazon EKS cluster that was created from a CloudFormation template. It upgrades the existing EKS cluster in place, without spinning up a new Auto Scaling group.

Considerations when updating

One important thing to take into consideration when upgrading a Kubernetes cluster is that Kubernetes can change drastically from version to version. I highly recommend that you test the behavior of your application against the upgraded Kubernetes version in a test or sandbox environment before performing the update on a production cluster.

In general, it is good practice to keep your EKS cluster up to date as newer versions of Kubernetes become available. Newer versions usually include performance improvements and new features that can help with both performance and cost management. XTIVIA strongly recommends building a continuous integration workflow to test your application behavior end to end before moving to a new Kubernetes version.

The update process consists of Amazon EKS launching new API server nodes with the updated Kubernetes version to replace the existing ones. EKS performs standard infrastructure and readiness health checks for network traffic on these new nodes to verify that they are working as expected. If any of these checks fail, EKS reverts the infrastructure deployment, and your cluster remains on the prior Kubernetes version. Running applications are not affected, and your cluster is never left in a non-deterministic or unrecoverable state. Amazon EKS regularly backs up all managed clusters, and mechanisms exist to recover clusters if necessary.

Keep in mind that Amazon EKS requires 2-3 free IP addresses from the subnets which were provided when you created the cluster in order to successfully upgrade the cluster. If these subnets do not have available IP addresses, then the upgrade can fail. Additionally, if any of the subnets or security groups that were provided during cluster creation have been deleted, the cluster upgrade process can fail.
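Because a shortage of free addresses only surfaces as a failed update, it can be worth checking the cluster's subnets first. The following is a minimal bash sketch, assuming the AWS CLI is configured for the cluster's account and Region; `cluster_subnet_ips` and `subnets_low_on_ips` are hypothetical helper names, and the default threshold of 3 simply follows the 2-3 address guidance in the paragraph above.

```shell
# List the cluster's subnets with their free IP counts, one "<subnet-id> <count>" per line.
# Assumes the AWS CLI can call eks:DescribeCluster and ec2:DescribeSubnets.
cluster_subnet_ips() {
  local subnets
  subnets=$(aws eks describe-cluster --name "$1" \
    --query 'cluster.resourcesVpcConfig.subnetIds' --output text)
  # $subnets is intentionally unquoted so each subnet ID becomes its own argument.
  aws ec2 describe-subnets --subnet-ids $subnets \
    --query 'Subnets[*].[SubnetId,AvailableIpAddressCount]' --output text
}

# Print the IDs of subnets with fewer free addresses than the threshold (default 3).
# Reads "<subnet-id> <count>" lines on stdin; pure text processing, no AWS calls.
subnets_low_on_ips() {
  local threshold=${1:-3}
  awk -v min="$threshold" 'NF >= 2 && $2 + 0 < min { print $1 }'
}

# Usage (not run here):
#   cluster_subnet_ips Your-EKS-Cluster | subnets_low_on_ips 3
```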

Upgrading the control plane

Upgrading the EKS control plane is relatively simple. When a new version is available, administrators can upgrade the control plane from either the Amazon EKS console or the AWS CLI.
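Amazon EKS updates the control plane one Kubernetes minor version at a time, so it helps to read the current version before choosing the target. A minimal bash sketch, assuming the AWS CLI is configured; `current_cluster_version` and `next_minor` are hypothetical helpers, and only the former calls AWS:

```shell
# Print the Kubernetes version the control plane currently runs, e.g. "1.13".
# Assumes the AWS CLI is configured with permission to call eks:DescribeCluster.
current_cluster_version() {
  aws eks describe-cluster --name "$1" --query 'cluster.version' --output text
}

# Print the next minor version after the given one: 1.13 -> 1.14, 1.13.5 -> 1.14.
# Hypothetical helper: pure string arithmetic, no AWS calls.
next_minor() {
  local major=${1%%.*}
  local rest=${1#*.}
  local minor=${rest%%.*}
  echo "${major}.$((minor + 1))"
}

# Usage (not run here):
#   current=$(current_cluster_version Your-EKS-Cluster)
#   aws eks update-cluster-version --name Your-EKS-Cluster \
#     --kubernetes-version "$(next_minor "$current")"
```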

From the CLI, three EKS API operations support cluster updates: UpdateClusterVersion, ListUpdates, and DescribeUpdate.

The UpdateClusterVersion operation is exposed in the AWS CLI as aws eks update-cluster-version and starts a cluster update between Kubernetes minor versions:

```shell
aws eks update-cluster-version --name Your-EKS-Cluster --kubernetes-version 1.14
```

You only need to pass in a cluster name and the desired Kubernetes version. You do not need to pick a specific patch version; Amazon EKS selects patch versions that are stable and well-tested. The command returns an "update" object with several important pieces of information:

```json
{
    "update": {
        "id": "UUID",
        "status": "InProgress",
        "type": "VersionUpdate",
        "createdAt": "Timestamp"
    }
}
```

This update object lets you track the status of the requested change to your cluster. Its status moves from InProgress to Successful, Failed, or Cancelled, and a failed update carries error details, for example when a misconfiguration on your cluster blocked it.
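Instead of re-running describe commands by hand, the status can be polled until it settles. A minimal bash sketch, assuming the AWS CLI is configured and relying on the `update.id` and `update.status` fields that current versions of the AWS CLI return; `wait_for_update` and `is_terminal_status` are hypothetical helpers, and the 60-second polling interval is an arbitrary choice:

```shell
# Return success (0) when an EKS update status is terminal.
# Terminal values of the EKS update status: Successful, Failed, Cancelled.
is_terminal_status() {
  case "$1" in
    Successful|Failed|Cancelled) return 0 ;;
    *) return 1 ;;
  esac
}

# Poll describe-update until the update finishes, print the final status,
# and return success only if it is Successful. Progress goes to stderr.
wait_for_update() {
  local cluster=$1 update_id=$2 status
  while :; do
    status=$(aws eks describe-update --name "$cluster" --update-id "$update_id" \
      --query 'update.status' --output text) || return 1
    echo "$(date -u +%H:%M:%S) ${status}" >&2
    if is_terminal_status "$status"; then
      echo "$status"
      [ "$status" = "Successful" ]
      return
    fi
    sleep 60
  done
}

# Usage (not run here):
#   update_id=$(aws eks update-cluster-version --name Your-EKS-Cluster \
#     --kubernetes-version 1.14 --query 'update.id' --output text)
#   wait_for_update Your-EKS-Cluster "$update_id"
```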

You can also list and describe updates independently, using the following operations:

```shell
aws eks list-updates --name Your-EKS-Cluster
```

This returns the IDs of the updates recorded for your cluster:

```json
{
    "updateIds": [
        "UUID-1",
        "UUID-2"
    ],
    "nextToken": null
}
```

Finally, you can describe a particular update to see details about its status:

```shell
aws eks describe-update --name Your-EKS-Cluster --update-id UUID
```

This returns a JSON response containing the current status of the update operation. For a failed update, the errors list explains what went wrong:

```json
{
    "update": {
        "id": "UUID",
        "status": "Failed",
        "type": "VersionUpdate",
        "createdAt": "Timestamp",
        "errors": [
            {
                "errorCode": "DependentResourceNotFound",
                "errorMessage": "The Role used for creating the cluster is deleted.",
                "resourceIds": ["aws:iam:arn:role"]
            }
        ]
    }
}
```

Reference table

The following table lists the versions of the EKS add-on components (Amazon VPC CNI plug-in, CoreDNS, and kube-proxy) for each Kubernetes version. Note these versions; the subsequent sections use them.

| Kubernetes version | 1.14 | 1.13 | 1.12 |
|---|---|---|---|
| Amazon VPC CNI plug-in | 1.5.5 | 1.5.5 | 1.5.5 |
| DNS (CoreDNS) | 1.6.6 | 1.6.6 | 1.6.6 |
| KubeProxy | 1.14.9 | 1.13.12 | 1.12.10 |
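Later commands refer to `$KubeProxyVersion` and `$CoreDNSVersion`. One way to set them from the table is sketched below in bash; the function name `addon_versions` and the extra `CniVersion` variable are illustrative, and the values are copied from the table rather than fetched from AWS, so update them if you target a different release.

```shell
# Set the add-on versions for a Kubernetes minor version, as listed in the reference table.
# Hypothetical helper: values are hard-coded from the table; extend the case for other versions.
addon_versions() {
  case "$1" in
    1.14) KubeProxyVersion=1.14.9;  CoreDNSVersion=1.6.6; CniVersion=1.5.5 ;;
    1.13) KubeProxyVersion=1.13.12; CoreDNSVersion=1.6.6; CniVersion=1.5.5 ;;
    1.12) KubeProxyVersion=1.12.10; CoreDNSVersion=1.6.6; CniVersion=1.5.5 ;;
    *) echo "no table entry for Kubernetes $1" >&2; return 1 ;;
  esac
  export KubeProxyVersion CoreDNSVersion CniVersion
}

# Usage:
#   addon_versions 1.14 && echo "kube-proxy v$KubeProxyVersion, CoreDNS v$CoreDNSVersion"
```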

Upgrading EKS nodes

This section covers the upgrade process for the worker nodes in the EKS environment. The node upgrade is a rolling upgrade: after each worker node is replaced, the process waits 5 minutes before starting on the next one. Before the worker nodes can be upgraded, several other pieces of the EKS infrastructure (kube-proxy, the cluster DNS provider, and the Amazon VPC CNI plug-in) have to be upgraded.

Patching kube-proxy

The kube-proxy DaemonSet has to be upgraded to the version that the reference table lists for your target Kubernetes version.
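Before patching, it can help to record the image kube-proxy currently runs, both to confirm the upgrade is needed and to have a rollback target. A minimal bash sketch, assuming kubectl points at the cluster being upgraded; `current_kube_proxy_image` and `image_tag` are hypothetical helper names:

```shell
# Print the image used by the kube-proxy DaemonSet's first container.
# Assumes kubectl is configured for the cluster being upgraded.
current_kube_proxy_image() {
  kubectl get daemonset kube-proxy -n kube-system \
    -o jsonpath='{.spec.template.spec.containers[0].image}'
}

# Print the tag (everything after the last ":") of an image reference,
# e.g. .../eks/kube-proxy:v1.13.12 -> v1.13.12. Assumes the reference has a tag.
image_tag() {
  echo "${1##*:}"
}

# Usage (not run here):
#   image_tag "$(current_kube_proxy_image)"
```

With the current version noted, patch the DaemonSet: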

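```shell
kubectl set image daemonset.apps/kube-proxy \
  -n kube-system \
  kube-proxy=602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/kube-proxy:v$KubeProxyVersion
```

This image URI points at the us-west-2 registry; if your cluster runs in another Region, use the Amazon EKS image registry for that Region.

Upgrade the Cluster DNS provider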

Before moving on, determine which DNS provider the cluster runs:

```shell
kubectl get deployments -l k8s-app=kube-dns -n kube-system
```

```text
NAME      READY   UP-TO-DATE   AVAILABLE   AGE
coredns   2/2     2            2           44d
```

Based on the output, we’re running CoreDNS.

Note: This cluster uses CoreDNS for DNS resolution, but your cluster may return kube-dns instead. If it does, substitute kube-dns for coredns in the commands that follow.

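Before upgrading CoreDNS, the CoreDNS replicas have to be scaled up to prevent an outage while the pods are replaced. Prior to the scale-up, check the CoreDNS configuration for directives that the newer version no longer supports and remove them. In the configuration below, these are the upstream option inside the kubernetes block (deprecated in newer CoreDNS releases) and the proxy plugin, which is not part of CoreDNS 1.5 and later and should be replaced with forward. If the configuration is not validated and the new CoreDNS version cannot load it, DNS lookups in the cluster will fail, causing an outage for every workload that depends on DNS resolution.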

```shell
kubectl edit cm coredns -n kube-system
```

The command opens the ConfigMap in your editor:

```yaml
# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          pods insecure
          upstream
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        proxy . /etc/resolv.conf
        cache 30
        loop
        reload
        loadbalance
    }
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"Corefile":".:53 {\n errors\n health\n kubernetes cluster.local in-addr.arpa ip6.arpa {\n pods insecure\n upstream\n fallthrough in-addr.arpa ip6.arpa\n }\n prometheus :9153\n proxy . /etc/resolv.conf\n cache 30\n loop\n reload\n loadbalance\n}\n"},"kind":"ConfigMap","metadata":{"annotations":{},"labels":{"eks.amazonaws.com/component":"coredns","k8s-app":"kube-dns"},"name":"coredns","namespace":"kube-system"}}
  creationTimestamp: "2019-04-15T17:42:25Z"
  labels:
    eks.amazonaws.com/component: coredns
    k8s-app: kube-dns
  name: coredns
  namespace: kube-system
  resourceVersion: "189"
  selfLink: /api/v1/namespaces/kube-system/configmaps/coredns
  uid: d4b63699-5fa5-11e9-9a27-0e4f4257637c
```
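Two common incompatibilities in older EKS Corefiles such as this one are the `upstream` option in the `kubernetes` block and the `proxy` plugin (replaced by `forward` in CoreDNS 1.5 and later). They can be checked without opening an editor. A minimal bash sketch, assuming kubectl points at the cluster; `corefile_warnings` and `corefile_fixed` are hypothetical helpers that look only for these two directives, so review their output rather than applying it blindly:

```shell
# Report Corefile lines using the upstream option or the proxy plugin.
# Reads the Corefile on stdin; prints one warning per matching line.
# Other incompatibilities are not detected.
corefile_warnings() {
  awk '
    $1 == "upstream" { printf "line %d: remove the upstream option\n", NR }
    $1 == "proxy"    { printf "line %d: replace proxy with forward\n", NR }
  '
}

# Print the Corefile with upstream lines deleted and proxy renamed to forward,
# for review before pasting it back with kubectl edit. Only the simple
# "proxy . /etc/resolv.conf" form maps directly onto forward.
corefile_fixed() {
  sed -e '/^[[:space:]]*upstream[[:space:]]*$/d' \
      -e '/^[[:space:]]*upstream[[:space:]]/d' \
      -e 's/^\([[:space:]]*\)proxy\([[:space:]]\)/\1forward\2/'
}

# Usage (not run here):
#   kubectl get configmap coredns -n kube-system -o jsonpath='{.data.Corefile}' | corefile_warnings
```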

Scale up to two replicas if the deployment currently runs fewer than two, to avoid an outage:

```shell
kubectl scale deployments/coredns --replicas=2 -n kube-system
```

Determine the current version of CoreDNS

```shell
kubectl describe deployment coredns --namespace kube-system | grep Image | cut -d "/" -f 3
```

```text
coredns:v1.3.1
```

If the running CoreDNS version is older than the version the reference table lists for the Kubernetes version you're upgrading to, patch CoreDNS:

```shell
kubectl set image --namespace kube-system deployment.apps/coredns \
  coredns=602401143452.dkr.ecr.us-west-2.amazonaws.com/eks/coredns:v$CoreDNSVersion
```

Checkpoint: Verify that the CoreDNS pods are running.
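The checkpoint can be made explicit by waiting for the rollout and then confirming that every CoreDNS pod is fully ready. A minimal bash sketch, assuming kubectl points at the upgraded cluster and that the CoreDNS pods carry the k8s-app=kube-dns label used earlier to find the deployment; `all_pods_ready` is a hypothetical helper:

```shell
# Succeed only if every pod line shows all containers ready and status Running.
# Reads `kubectl get pods --no-headers` output on stdin (columns: NAME READY STATUS ...).
# Fails on empty input so that "no pods found" is not mistaken for success.
all_pods_ready() {
  awk '
    NF == 0 { next }
    {
      seen++
      split($2, r, "/")
      if (r[1] != r[2] || $3 != "Running") bad++
    }
    END { if (seen == 0 || bad > 0) exit 1; exit 0 }
  '
}

# Usage (not run here):
#   kubectl rollout status deployment/coredns -n kube-system --timeout=5m
#   kubectl get pods -n kube-system -l k8s-app=kube-dns --no-headers | all_pods_ready \
#     && echo "CoreDNS is ready"
```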

Patch VPC CNI with the latest version

Check the version of your cluster's Amazon VPC CNI plug-in for Kubernetes:

```shell
kubectl describe daemonset aws-node --namespace kube-system | grep Image | cut -d "/" -f 2
```

```text
amazon-k8s-cni:v1.5.5
```
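Comparing dotted versions by eye is error-prone (1.10 is newer than 1.9). A minimal bash sketch of a numeric comparison, assuming plain numeric components with an optional leading v; `version_lt` is a hypothetical helper, and 1.5.5 in the usage comment is the CNI version from the reference table:

```shell
# Return success (0) if dotted version $1 is strictly older than $2.
# A leading "v" is ignored; missing components count as 0 (1.5 == 1.5.0).
# Assumes purely numeric components such as 1.5.5.
version_lt() {
  local IFS=.
  local -a a=(${1#v}) b=(${2#v})
  local i x y
  for ((i = 0; i < ${#a[@]} || i < ${#b[@]}; i++)); do
    x=${a[i]:-0}
    y=${b[i]:-0}
    if ((10#$x < 10#$y)); then return 0; fi
    if ((10#$x > 10#$y)); then return 1; fi
  done
  return 1  # equal versions are not "older"
}

# Usage (not run here), assuming kubectl points at the cluster:
#   image=$(kubectl get daemonset aws-node -n kube-system \
#     -o jsonpath='{.spec.template.spec.containers[0].image}')
#   version_lt "${image##*:}" 1.5.5 && echo "Amazon VPC CNI plug-in needs patching"
```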

If the cluster runs a version older than 1.5.x, patch the CNI plug-in with the following command:

```shell
kubectl apply -f https://raw.githubusercontent.com/aws/amazon-vpc-cni-k8s/release-1.5/config/v1.5/aws-k8s-cni.yaml
```

Scale-down Kubernetes Cluster AutoScaler

If you have the Kubernetes Cluster AutoScaler deployed, it is very important to scale it down so that no scaling operations interfere with the worker node upgrade. If the Auto Scaling group scales while the worker nodes are being upgraded, the upgrade can fail and roll back.
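The scale-up step later in this post restores a single replica. If your AutoScaler normally runs a different count, you may prefer to record it first. A minimal bash sketch, assuming the deployment is named cluster-autoscaler in kube-system as in the command that follows; `save_autoscaler_replicas` and `normalize_replicas` are hypothetical helpers:

```shell
# Echo a replica count, falling back to 1 (the value the scale-up step uses)
# when the input is empty or not a plain non-negative integer.
normalize_replicas() {
  case "$1" in
    ''|*[!0-9]*) echo 1 ;;
    *) echo "$1" ;;
  esac
}

# Print the Cluster AutoScaler's current replica count.
# Assumes kubectl points at the cluster and the deployment name is cluster-autoscaler.
save_autoscaler_replicas() {
  normalize_replicas "$(kubectl get deployment cluster-autoscaler -n kube-system \
    -o jsonpath='{.spec.replicas}')"
}

# Usage (not run here):
#   saved=$(save_autoscaler_replicas)
#   # ...scale down, upgrade the nodes, then restore:
#   kubectl scale deployments/cluster-autoscaler --replicas="$saved" -n kube-system
```

Then scale the AutoScaler down: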

```shell
kubectl scale deployments/cluster-autoscaler --replicas=0 -n kube-system
```

Upgrade Nodes

Launch AWS Console

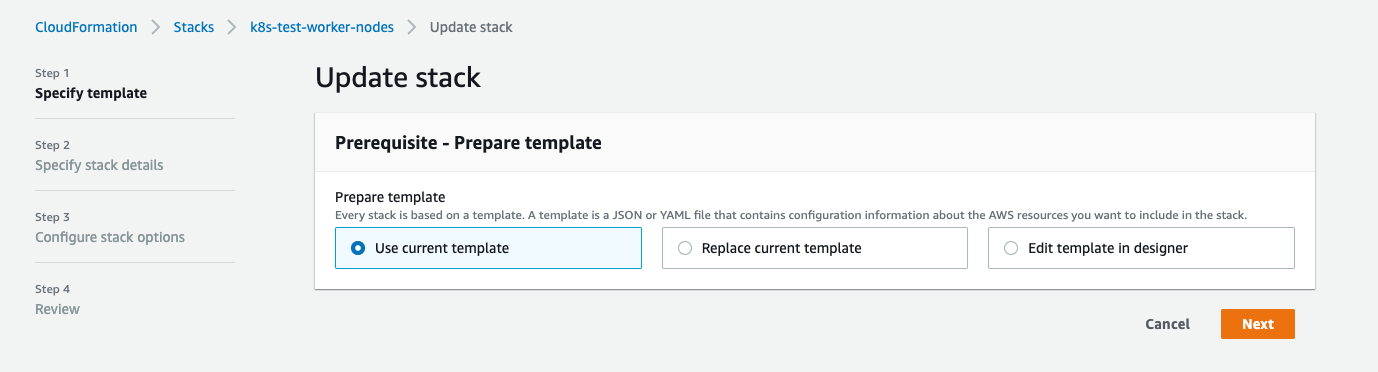

Open the AWS CloudFormation console at https://console.aws.amazon.com/cloudformation.

Select the node group to upgrade

Select the right worker node group stack, and then choose Update.

Replace the current template

Select Replace current template, choose Amazon S3 URL, and enter the following URL:

https://amazon-eks.s3-us-west-2.amazonaws.com/cloudformation/2019-11-15/amazon-eks-nodegroup.yaml

Specify stack details

On the Specify stack details page, fill out the following parameters, and choose Next:

- NodeAutoScalingGroupDesiredCapacity – One more than the current number of nodes.

- NodeAutoScalingGroupMaxSize – At least one more than the current number of nodes.

- NodeInstanceType – The EC2 instance type the nodes currently use, for example m5.2xlarge.

- NodeImageIdSSMParam – The AWS Systems Manager parameter that holds the AMI ID you want to update to. The following value uses the latest Amazon EKS-optimized AMI for Kubernetes version 1.14: /aws/service/eks/optimized-ami/1.14/amazon-linux-2/recommended/image_id

- NodeImageId – Leave blank. (Note the current image ID before the upgrade in case you need to roll back.)
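The capacity values and the AMI behind the SSM parameter can be looked up from the CLI before filling in the form. A minimal bash sketch, assuming the AWS CLI is configured; the Auto Scaling group name is a placeholder you must supply, and `asg_capacity`, `upgrade_capacity`, and `latest_eks_ami` are hypothetical helpers:

```shell
# Print "<DesiredCapacity> <MaxSize>" for the node group's Auto Scaling group.
# Assumes the AWS CLI can call autoscaling:DescribeAutoScalingGroups.
asg_capacity() {
  aws autoscaling describe-auto-scaling-groups --auto-scaling-group-names "$1" \
    --query 'AutoScalingGroups[0].[DesiredCapacity,MaxSize]' --output text
}

# Compute the values to enter: desired = current desired + 1,
# max = the larger of the current max and the new desired. Prints "<desired> <max>".
upgrade_capacity() {
  local desired=$1 max=$2
  local new_desired=$((desired + 1))
  if ((max < new_desired)); then max=$new_desired; fi
  echo "$new_desired $max"
}

# Resolve the AMI ID stored in the EKS-optimized AMI parameter for a Kubernetes version.
latest_eks_ami() {
  aws ssm get-parameter \
    --name "/aws/service/eks/optimized-ami/$1/amazon-linux-2/recommended/image_id" \
    --query 'Parameter.Value' --output text
}

# Usage (not run here):
#   read -r desired max < <(asg_capacity Your-NodeGroup-ASG)
#   upgrade_capacity "$desired" "$max"   # a 3-node group with MaxSize 3 gives "4 4"
#   latest_eks_ami 1.14
```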

Review data

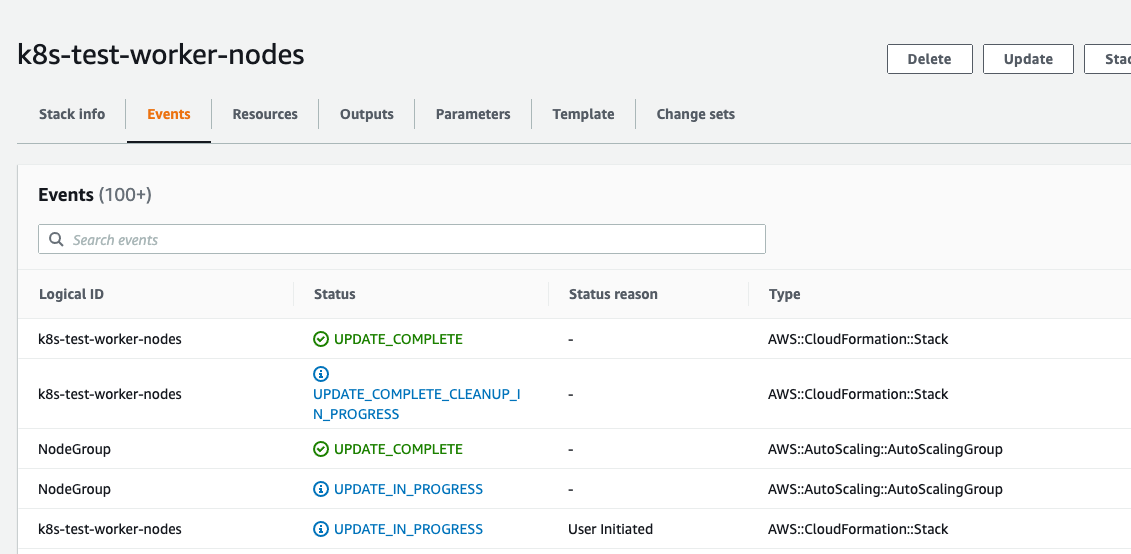

On the Review page, review the stack configuration, acknowledge that the stack might create IAM resources, and then choose Update stack.

Scale-up cluster autoscaler

After the upgrade is complete, scale the cluster autoscaler back up:

```shell
kubectl scale deployments/cluster-autoscaler --replicas=1 -n kube-system
```

Monitor the upgrade process

Monitor the upgrade process and wait for the confirmation on the CloudFormation console that the upgrade is complete.
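The same wait can be scripted, together with a check that every node reports the new kubelet version. A minimal bash sketch, assuming the AWS CLI and kubectl are configured; the stack name is a placeholder, and `watch_node_upgrade` and `nodes_not_on` are hypothetical helpers:

```shell
# Count nodes whose kubelet version does not start with "v<target>.".
# Reads one kubelet version per line on stdin, e.g. "v1.14.9-eks-1f0ca9"; blank lines are ignored.
nodes_not_on() {
  local target=$1
  awk -v prefix="v${target}." 'NF && index($0, prefix) != 1 { n++ } END { print n + 0 }'
}

# Block until CloudFormation reports the node group stack update as complete,
# then print how many nodes still run an older kubelet.
watch_node_upgrade() {
  local stack=$1 target=$2
  aws cloudformation wait stack-update-complete --stack-name "$stack" || return 1
  kubectl get nodes \
    -o jsonpath='{range .items[*]}{.status.nodeInfo.kubeletVersion}{"\n"}{end}' \
    | nodes_not_on "$target"
}

# Usage (not run here):
#   watch_node_upgrade Your-NodeGroup-Stack 1.14   # prints 0 once every node is upgraded
```

The CloudFormation waiter stops after a fixed number of polling attempts, so for a large node group with a 5-minute pause per node it may give up before the update finishes; re-running it only polls again.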

Summary

Keeping your EKS cluster running with the latest version of Kubernetes is important for optimum performance and functionality. However, there are many components that need to be upgraded outside of the control plane for a successful upgrade of the EKS cluster. While multiple Kubernetes platforms provide you with tools to help make the upgrade easier, you need to know the components to consider while upgrading your Kubernetes environment. With our Kubernetes expertise, we can help make the upgrade seamless with no outage to the consumers of Kubernetes services.

If you have questions on how you can best leverage Kubernetes, or need help with your Kubernetes implementation, please engage with us via comments on this blog post, or reach out to us here.

Additional Reading

You can also continue to explore Kubernetes by checking out the Kubernetes, OpenShift, and the Cloud Native Enterprise blog post, or Demystifying Docker and Kubernetes. You can also reach out to us to plan your Kubernetes implementation, or read AWS-related posts such as DNS Forwarding in Route 53.