Capturing changes from the data sources for implementing an incremental data load is a common requirement in data engineering pipelines. One of the cool features of Azure Data Factory is CDC (Change Data Capture). This can be implemented using various options available in mapping data flow or Change Data Capture factory resource.

Mapping Data Flow

We can graphically design the data flows and transformations using the mapping data flows. Moreover, mapping data flow allows us to model the data transformations without writing code.

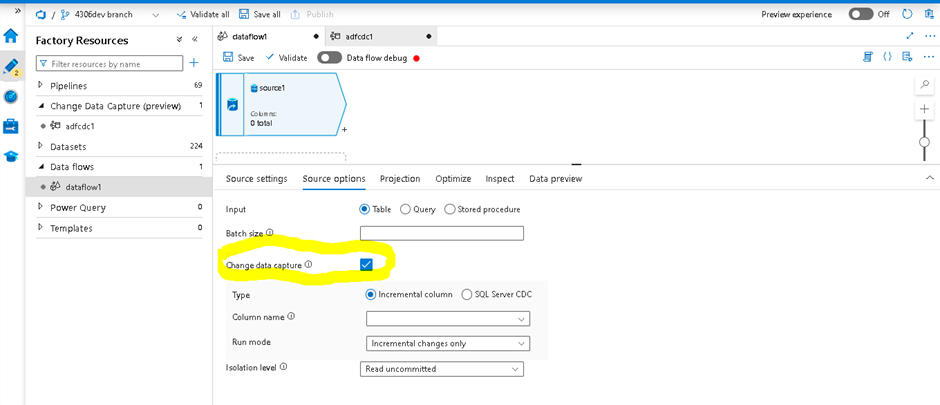

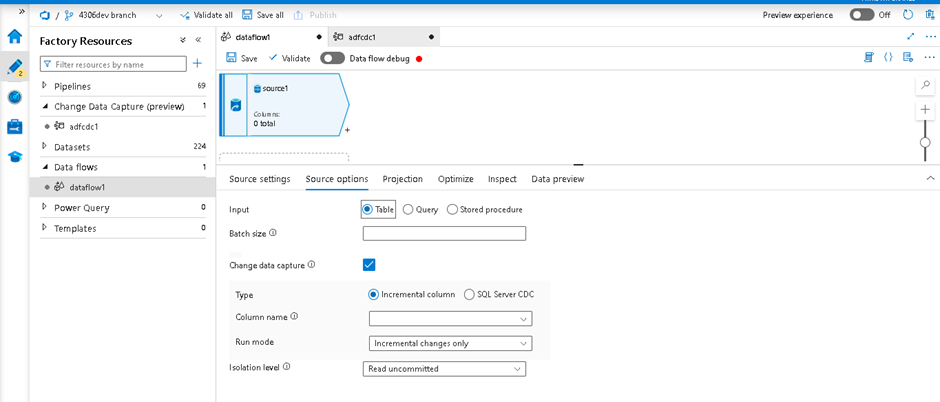

To implement Change Data Capture, first, create a new mapping data flow and select the source, as shown in the screenshot below. Once we choose the source dataset, if we go to Source Options, we have the Change Data Capture checkbox, as highlighted in the screenshot below.

We have two options within this. One is an incremental column, and the other is a database-maintained change log-based approach.

Incremental Column:

Changed records would be automatically detected by data flows using this incremental Column approach. This applies to files as well. Changed files would be detected using the last modified timestamp. We must select a column that would be used for identifying changes (an incremental column) when we want to extract delta data.

We must select a column that would be used for identifying changes (an incremental column) when we want to extract delta data.

We can observe both options for Change Data Capture from the screenshot below. For incremental data extraction, we have to provide the column name we want to use for identifying changes. As of this writing, Azure Data Factory supports the following sources for this option.

- Azure Blob Storage

- ADLS Gen2

- ADLS Gen1

- Azure SQL Database

- SQL Server

- Azure SQL Managed Instance

- Azure Database for MySQL

- Azure Database for PostgreSQL

- Common data model

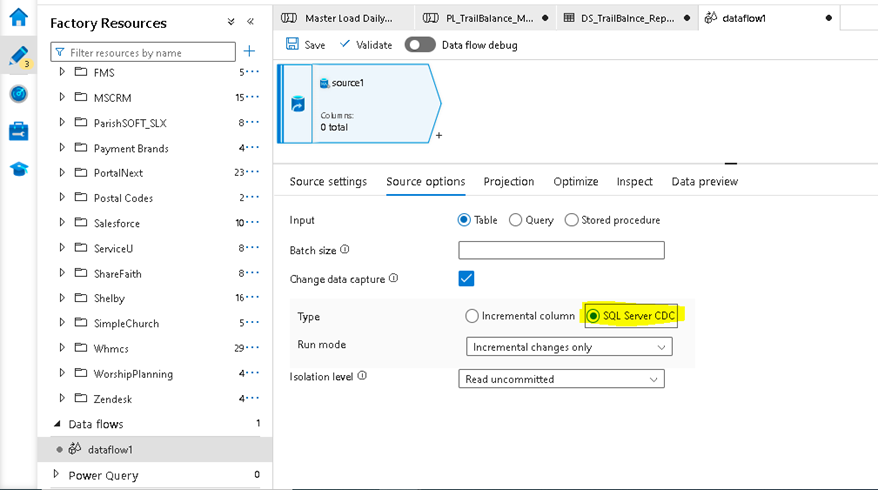

Database-maintained Change Log:

Another option for implementing the CDC is using the native change data capture technology in the databases. This option does not require any column to identify changes. Instead, it uses the internal change log maintained by the database (a log-based CDC at the source database/table level).

In this option, we only need to enable the SQL Server CDC and select the target table in sink transformation. Then, automatically changes from the source table would be transmitted to the target table. Azure Data Factory currently supports the following sources for database change log-based CDC.

- Azure SQL Database

- SQL Server

- Azure SQL Managed Instance

- Azure Cosmos DB (SQL API)

- SAP CDC

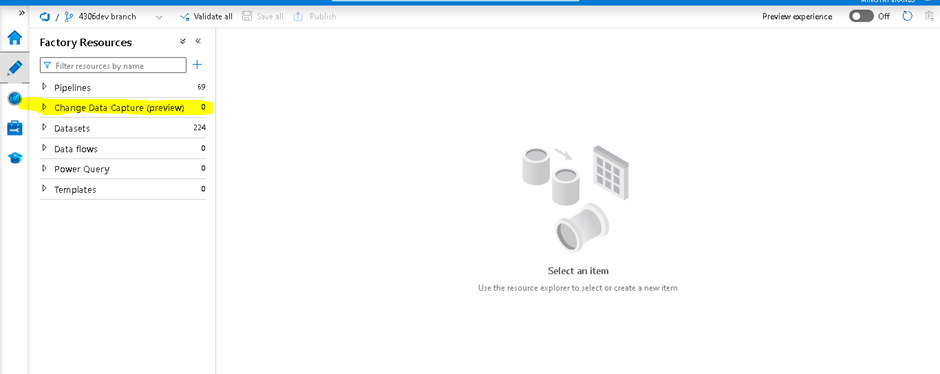

Change Data Capture Factory Resource

Microsoft added a new feature to Azure Data Factory to capture change data between source and target.

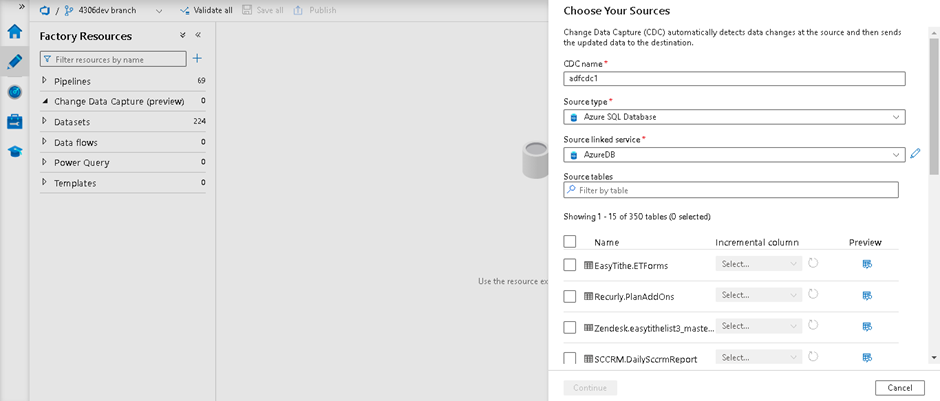

If we observe, there is a Change Data Capture option below the Pipelines option. All we have to do is click on the above option, and it will navigate to the new CDC page, as shown in the screenshot below.

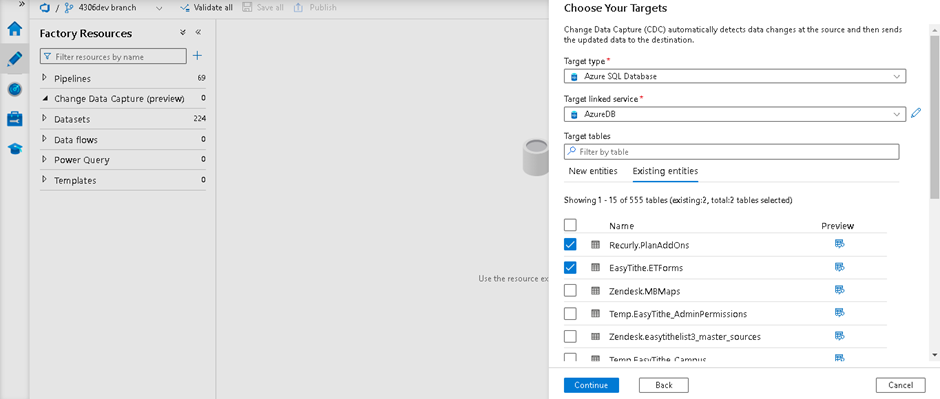

After filling in the CDC name and selecting the Source type, we must choose the linked service for connecting to the source. We would see a list of source tables to choose from. But this uses an incremental column to perform Change Data Capture – a date column from the source table. After selecting the tables and incremental columns, we must click on continue. It would navigate to the target options page. Here we must choose the target database, target linked service, and target tables corresponding to the selected source tables.

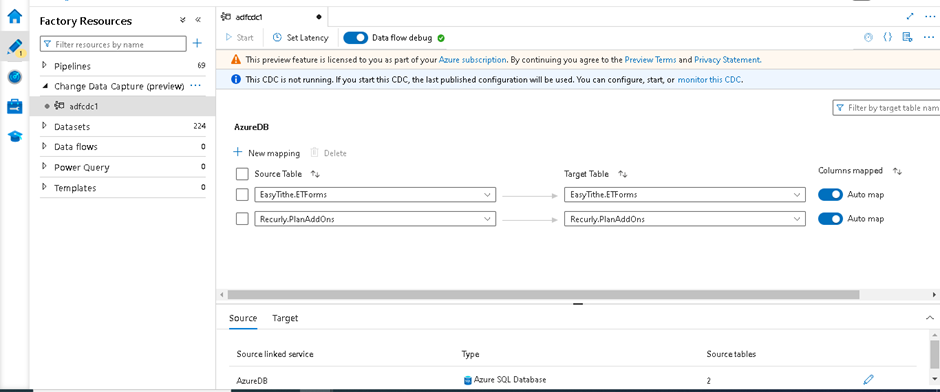

After that, clicking Continue will take us to the mapping page with a Run option to start CDC flow. Once we start this, changes will automatically be pushed from source to target until we stop that option.

Note: This option is still in preview mode in the Azure Data Factory as of this writing. According to Microsoft, we will be charged only for the duration of the CDC run.

If you have any questions about this blog or need help with Azure Data Engineering, please contact us.