In today’s digital transformation era, development teams face a dual mandate: meet customers’ changing needs, and do so with faster development cycles. Speed has always been a goal, and even with a monolithic codebase applications can be deployed fairly rapidly, sometimes once a month or even once a week. However, the agility needed to keep your enterprise aligned with changing demands cannot be achieved with monolithic applications. Greater delivery speed becomes possible by breaking the application up into small building blocks, each a codebase with its own independent workflow, governance, and delivery pipeline. Welcome to the world of microservices.

Fundamental problems with Microservices:

Inter-Service Communication:

We can successfully break up the application into small building blocks, or (micro)services, and deploy them across a distributed enterprise. These microservices communicate, or chatter, heavily over the network. When we add a network dependency to the application logic, we introduce a whole set of potential hazards that grows with the number of connections the application depends on.

Kubernetes, as a container orchestration platform, addresses part of this problem by scheduling and managing the microservices as Pods across the nodes of a cluster. But the challenges of inter-service communication, such as service-to-service networking and security, persist. A service mesh is designed to address these remaining challenges.

Introduction to Service Mesh:

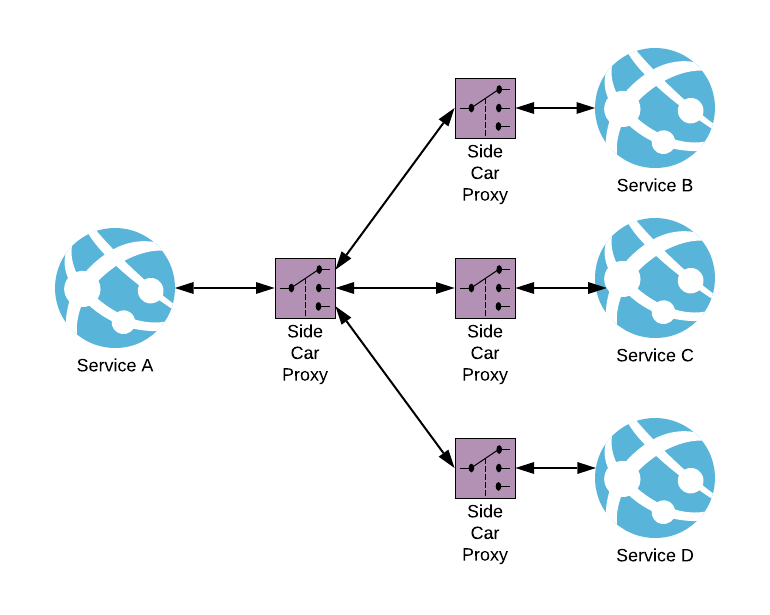

A service mesh is a set of configurable proxies with built-in capabilities to handle inter-service communication and resiliency. It takes the networking and security challenges of operating microservices out of the application code and moves them into the service mesh layer, where they are handled through configuration. Communication is abstracted away from the microservices by sidecar proxies, which establish TLS (Transport Layer Security) connections for inbound and outbound links.

A key component of a service mesh is the sidecar proxy (the name is a reference to the motorcycle sidecar). Sidecar is a design pattern in which a helper component is attached to the microservice and handles peripheral tasks such as platform abstraction, proxying to remote services, configuration, and logging. By taking on these tasks, the sidecar lets microservice developers focus on the core business functionality.

Various Service Mesh offerings:

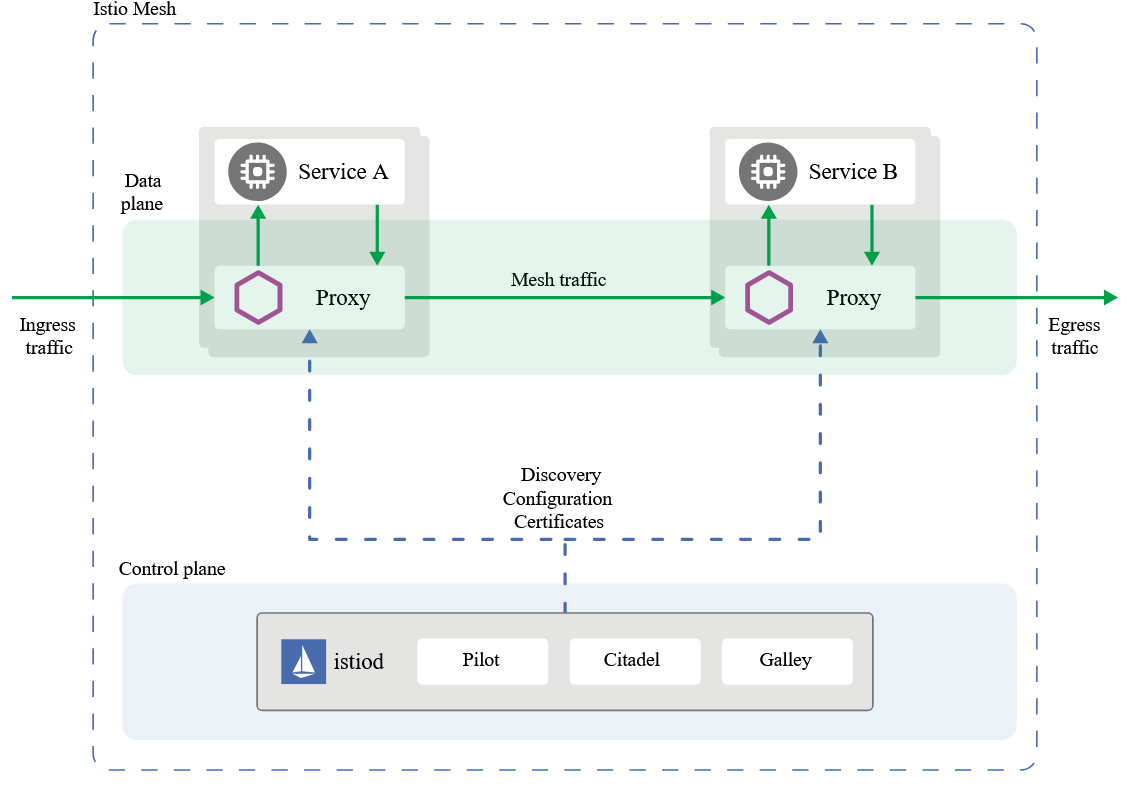

1) Istio: Istio is an open source service mesh originally developed by Google, IBM, and Lyft (istio.io).

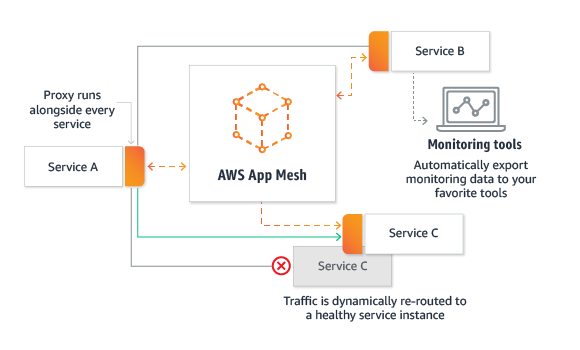

2) AWS App Mesh

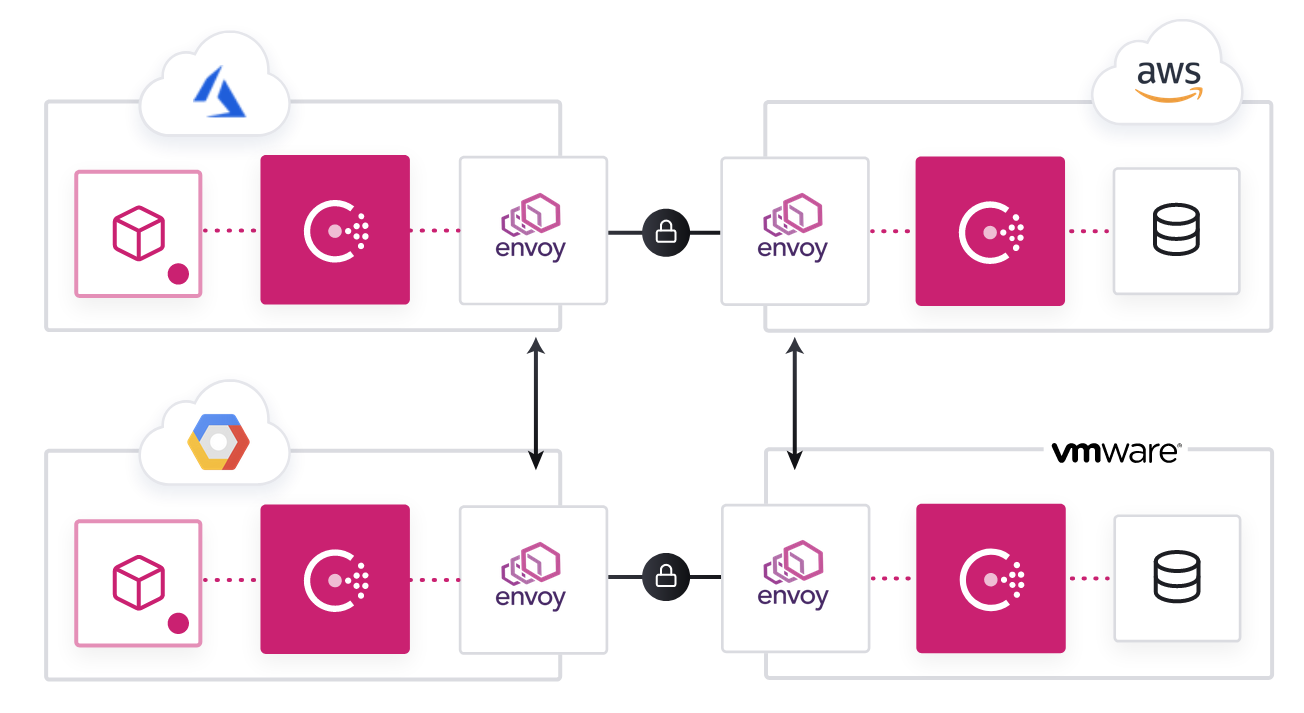

3) Consul: Consul by HashiCorp is a service mesh solution that offers a software-driven approach to routing and segmentation. It also provides failure handling, retries, and network observability, and offers a platform-agnostic, multi-cluster mesh with first-class Kubernetes support.

Key Features of Service Mesh:

Traffic Management:

Service mesh traffic management divides traffic between different subsets of service instances. Many business needs call for routing that goes beyond returning all healthy instances for load balancing. Common cases include:

- Canary Deployment

- Traffic splitting

- Egress Control

- Traffic mirroring
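
Canary deployment and traffic splitting both come down to weighted routing between subsets. The following is a minimal Python sketch of that idea, not how any particular mesh implements it. The subset names `v1` and `v2`, the 90/10 weights, and the `pick_subset` helper are assumptions made for illustration.

```python
import random
from collections import Counter


def pick_subset(weights, rng):
    """Choose one subset name, with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Hypothetical canary split: 90% of requests to stable v1, 10% to canary v2.
weights = {"v1": 90, "v2": 10}
rng = random.Random(42)  # seeded only to make the sketch repeatable

# Simulate routing 10,000 requests and count where each one lands.
counts = Counter(pick_subset(weights, rng) for _ in range(10_000))
print(counts)  # v1 should receive the large majority of requests
```

In a real mesh the weights would live in routing configuration rather than in application code, so shifting more traffic to the canary is a configuration change and not a redeploy.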

Service Resiliency:

Microservices communicate over unreliable networks and should be actively guarded against network failures so that a single failure does not cascade through the entire system.

- Client-side load balancing: Service Mesh augments Kubernetes out-of-the-box load balancing.

- Circuit breaker: Instead of overwhelming the degraded service, open the circuit and reject further requests.

- Retry: If one pod returns an error (e.g., 503), retry the request against another pod.

- Pool ejection: This provides auto removal of error-prone pods from the load balancing pool.

- Timeout: Wait only X seconds for a response and then give up.
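
To make two of these patterns concrete, here is a minimal Python sketch of a circuit breaker and a retry across pods. In a mesh this logic runs in the sidecar proxy and is driven by configuration; the class and function names, the thresholds, and the pods modeled as plain callables are all assumptions for illustration.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without attempting it."""


class CircuitBreaker:
    """Open after `max_failures` consecutive errors; allow a trial call after `reset_after` seconds."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock  # injectable so the cool-down can be tested without waiting
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; request rejected")
            # Cool-down elapsed: allow one trial call; a single failure re-opens the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success resets the failure count
        return result


def call_with_retry(pods, request):
    """Try each pod in turn and return the first successful response."""
    last_error = None
    for pod in pods:
        try:
            return pod(request)
        except ConnectionError as exc:  # treated here as a retryable failure
            last_error = exc
    if last_error is None:
        raise ValueError("no pods to call")
    raise last_error


# Hypothetical pods, modeled as plain callables.
def failing_pod(request):
    raise ConnectionError("503 Service Unavailable")


def healthy_pod(request):
    return f"ok: {request}"


print(call_with_retry([failing_pod, healthy_pod], "GET /orders"))  # ok: GET /orders
```

A breaker would typically wrap calls to one upstream service, for example `CircuitBreaker(max_failures=2).call(healthy_pod, "GET /orders")`, so that repeated failures stop reaching a degraded service until the cool-down has passed.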

Chaos Engineering:

Chaos engineering is the practice of experimenting on a distributed microservice architecture to gain confidence in the system’s capability to withstand turbulent conditions in production. Service Mesh allows developers to “inject chaos” and test the resiliency of the microservices.

- Injecting HTTP Errors

- Injecting Delays
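
The two kinds of fault above can be sketched as a wrapper around a request handler. This is a simplified Python illustration under stated assumptions, not a mesh API: `with_faults`, `InjectedFault`, and the percentage parameters are hypothetical names, and a real mesh injects these faults in the proxy without touching the service.

```python
import random
import time


class InjectedFault(Exception):
    """An artificial HTTP error raised instead of calling the real handler."""

    def __init__(self, status):
        super().__init__(f"injected HTTP {status}")
        self.status = status


def with_faults(handler, abort_pct=0.0, abort_status=503,
                delay_pct=0.0, delay_s=0.0, rng=None, sleep=time.sleep):
    """Wrap `handler` so a percentage of requests are delayed and/or aborted."""
    rng = rng or random.Random()

    def wrapped(request):
        if rng.random() * 100 < delay_pct:
            sleep(delay_s)  # simulate a slow network or an overloaded upstream
        if rng.random() * 100 < abort_pct:
            raise InjectedFault(abort_status)  # simulate an upstream failure
        return handler(request)

    return wrapped


# Hypothetical handler standing in for a real service endpoint.
def handler(request):
    return f"200 OK for {request}"


always_fail = with_faults(handler, abort_pct=100)
try:
    always_fail("GET /cart")
except InjectedFault as fault:
    print(fault)  # injected HTTP 503
```

Running callers against a wrapped handler like this shows whether their timeouts, retries, and circuit breakers behave as intended before a real outage tests them.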

Observability:

One of the greatest challenges in managing a microservices architecture is understanding how traffic flows between the system’s individual services. A single end-user transaction might flow through several independently deployed microservices, so being able to pinpoint where performance bottlenecks or errors occurred provides valuable information.

A service mesh is a collection of proxies deployed alongside microservices, which means the proxies “see” all the traffic that flows through them. You can use a metrics collection and visualization toolset (for example, Prometheus and Grafana, which can be installed with Helm charts) for the following:

- Tracing

- Metrics

- Service Graph
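
Because every request passes through a proxy, per-edge metrics and a service graph can be derived from the request records alone. The Python sketch below assumes a hypothetical record format of (source, destination, HTTP status, latency in milliseconds); the service names and the `summarize` helper are illustrative only.

```python
from collections import defaultdict

# Hypothetical request records as a proxy might observe them.
records = [
    ("frontend", "orders", 200, 12.0),
    ("frontend", "orders", 503, 250.0),
    ("orders", "payments", 200, 40.0),
]


def summarize(records):
    """Aggregate records into per-edge request, error, and latency totals."""
    edges = defaultdict(lambda: {"requests": 0, "errors": 0, "total_ms": 0.0})
    for source, dest, status, latency_ms in records:
        edge = edges[(source, dest)]
        edge["requests"] += 1
        edge["errors"] += 1 if status >= 500 else 0  # count server errors only
        edge["total_ms"] += latency_ms
    return dict(edges)


graph = summarize(records)
for (source, dest), stats in graph.items():
    mean_ms = stats["total_ms"] / stats["requests"]
    print(f"{source} -> {dest}: {stats['requests']} requests, "
          f"{stats['errors']} errors, mean {mean_ms:.1f} ms")
```

The keys of `graph` are the edges of the service graph, and the values are the kind of per-edge metrics a dashboard would chart.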

Secure Service-to-Service Communication:

Security is paramount when it comes to inter-service communication. Service Mesh can apply security constraints across the application with zero impact on each microservice’s actual programming logic.

- mTLS: Mutual TLS is TLS in which both sides of a connection, client and server, present certificates and verify each other’s identity. It protects inter-service communication against eavesdropping, tampering, and spoofing, which makes it possible to run a service mesh over untrusted networks.

- Role-Based Access Control: Access Control Lists (ACLs) are needed to secure UI, API, CLI, service communications, and agent communications.
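
To show what the "mutual" in mTLS means in practice, here is a minimal sketch using Python’s standard `ssl` module. In a mesh the sidecar proxies set this up and rotate the certificates automatically; the function names are hypothetical, and the certificate paths are placeholders that are left optional only so the sketch runs without files on disk.

```python
import ssl


def mtls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Build a server-side TLS context that also demands a client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # The "mutual" part: reject clients that do not present a valid certificate.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # this service's identity
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)  # CA trusted to sign client certificates
    return ctx


def mtls_client_context(cert_file=None, key_file=None, ca_file=None):
    """Build a client-side context that verifies the server and presents its own certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # the client's identity
    return ctx


server_ctx = mtls_server_context()
client_ctx = mtls_client_context()
print(server_ctx.verify_mode, client_ctx.verify_mode)
```

With plain TLS only the client verifies the server. Setting `verify_mode` to `ssl.CERT_REQUIRED` on the server side is what makes the verification run in both directions.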

Conclusion:

Service Mesh remains a critical component of cloud-native architecture. It handles the crosscutting concerns of microservice communication through configuration and lets developers focus on what they should focus on: “The Business Logic.” Now the development team can meet the dual mandate of responding to changing customer needs while offering faster development cycles.

Cloud-native architecture is a fast-moving field whose roadmap and landscape change quickly, and it comes with its own learning curve and added maintenance. In addition, other technologies such as API gateways and serverless functions have capabilities that overlap with a service mesh. Hence, we recommend a careful assessment of the use cases before implementation.