Overview of Data Quality

Corporations and individuals today depend heavily on data, because decisions grounded in data tend to be more successful. As a result, organizations are investing in improving the quality of their data. Here are some of the reasons to manage Data Quality:

- Meet customers’ demand for better products and services.

- Find cross-sell and up-sell opportunities.

- Achieve a better Return on Investment (ROI).

- Comply with legal rules and regulations.

- Compete in today’s market.

The quality of the data plays an important role in managing business challenges and achieving desired business outcomes. For instance, reliable prospect and customer data makes it possible to understand prospects and customers better, offer customized and value-added services, and improve Customer Relationship Management overall, with the potential for improving revenues. Some of the customer data elements that need to be precise to achieve good business results are:

- Customer/Prospect Name

- E-mail Address

- Phone Number

- Mailing/Billing/Shipping Address

Measuring Data Quality

Data quality is measured on the following key dimensions, though other dimensions can be considered depending on need.

- Completeness: The data captured should be complete. Imagine the customer’s contact information, such as Phone Number and Email Address, being unavailable.

- Conformity: Measures how much of the data conforms to the standard data definition. For example, a Canadian Postal Code should comply with the “A9A 9A9” format.

- Consistency: Measures whether data is consistent across applications and data stores. For example, a customer’s address in the CRM should match the address in the Marketing application.

- Accuracy: This relates to the “correctness” of data. Data may be complete, conform to a standard format, and be consistent across all data stores, yet it is of no use if it is not accurate. For instance, the Customer’s email address should be valid and correct.

- Timeliness: The timeliness of data is as important as the other dimensions of data quality. Data should be available when it is needed; otherwise, it could lead to many issues, including losing business to the competition. Imagine the effect of a flight status display not providing up-to-the-minute data.
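Two of these dimensions, Completeness and Conformity, are straightforward to express as ratios. The following is a minimal Python sketch, not a definitive implementation: the function names and the sample values are hypothetical, and the regular expression checks only the “A9A 9A9” shape, not whether a postal code actually exists.

```python
import re

# Shape of a Canadian postal code: letter, digit, letter, space, digit, letter, digit.
# Assumption: uppercase letters with a single space, as in the "A9A 9A9" example.
POSTAL_CODE_PATTERN = re.compile(r"[A-Z]\d[A-Z] \d[A-Z]\d")


def _present(values):
    """Keep only values that are neither None nor blank."""
    return [v for v in values if v is not None and str(v).strip() != ""]


def completeness(values):
    """Share of values that are present (0.0 for an empty input)."""
    if not values:
        return 0.0
    return len(_present(values)) / len(values)


def conformity(values, pattern):
    """Share of present values whose full text matches the expected format."""
    present = _present(values)
    if not present:
        return 0.0
    matching = [v for v in present if pattern.fullmatch(str(v))]
    return len(matching) / len(present)


# Hypothetical sample: two well-formed codes, one malformed, two missing.
postal_codes = ["K1A 0B1", "M5V 3L9", "m5v3l9", None, ""]
print(completeness(postal_codes))
print(conformity(postal_codes, POSTAL_CODE_PATTERN))
```

In this sample, three of the five values are present, and two of those three match the format. Conformity is computed over present values only, so that a missing value is counted once (under Completeness) rather than twice.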

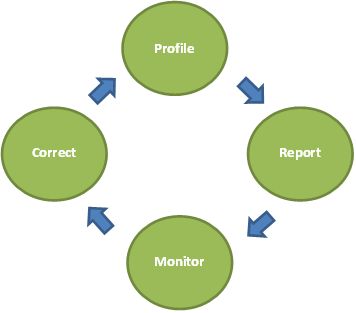

Data Quality Management Lifecycle

Data Profiling: The process of describing and analyzing the characteristics of data, such as Data Type, Length, Distinct Values, Minimum Value, Maximum Value, Average Value, Count of Nulls, and Count of values conforming to a known format. The process also includes validating the data against known business/validation rules.
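As an illustration of the characteristics listed above, here is a small Python sketch of a single-column profiler. It is an assumption-laden example rather than a replacement for a profiling tool: `profile_column` and the sample `ages` column are hypothetical names, and minimum, maximum, and average are computed for numeric values only.

```python
from statistics import mean


def profile_column(values):
    """Summarize one column: counts, types, length range, and numeric statistics."""
    non_null = [v for v in values if v is not None]
    lengths = [len(str(v)) for v in non_null]
    # bool is a subclass of int in Python, so exclude it from numeric statistics.
    numeric = [
        v for v in non_null
        if isinstance(v, (int, float)) and not isinstance(v, bool)
    ]
    return {
        "count": len(values),
        "null_count": len(values) - len(non_null),
        "distinct_count": len(set(non_null)),
        "types": sorted({type(v).__name__ for v in non_null}),
        "min_length": min(lengths) if lengths else None,
        "max_length": max(lengths) if lengths else None,
        "min_value": min(numeric) if numeric else None,
        "max_value": max(numeric) if numeric else None,
        "avg_value": mean(numeric) if numeric else None,
    }


# Hypothetical column with one missing value and one repeated value.
ages = [34, 51, None, 34, 27]
profile = profile_column(ages)
for name, value in profile.items():
    print(f"{name}: {value}")
```

Business or validation rules (for example, an allowed range for an age) could be added as further checks over the same values.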

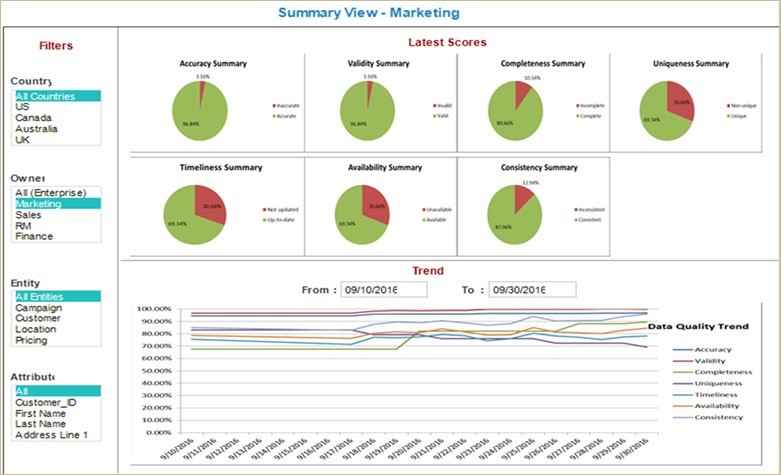

Reporting: A Scorecard should be implemented to review data quality metrics regularly. This makes it possible to identify exceptions and the corrective actions to be taken. A Data Quality Scorecard helps organizations understand the status of their Data Quality more effectively. The metrics can be reported at different levels (Business Unit, Location, Enterprise, etc.).

The Scorecard should be designed to be flexible, provide a summary, drill down to the lowest level, and visualize trends.
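One way to support both a summary and a drill-down is to store pass/total counts at the lowest level and aggregate them on demand. The sketch below assumes a hypothetical record layout (`business_unit`, `location`, `dimension`, `passed`, `total`) and made-up numbers; it is meant only to show the roll-up idea.

```python
from collections import defaultdict

# Hypothetical granular results: one row per business unit, location, and dimension.
results = [
    {"business_unit": "Retail", "location": "Toronto",
     "dimension": "Completeness", "passed": 950, "total": 1000},
    {"business_unit": "Retail", "location": "Ottawa",
     "dimension": "Completeness", "passed": 400, "total": 500},
    {"business_unit": "Wholesale", "location": "Toronto",
     "dimension": "Completeness", "passed": 150, "total": 200},
]


def rollup(rows, keys):
    """Sum passed/total counts per group of `keys` and return percentage scores."""
    totals = defaultdict(lambda: [0, 0])
    for row in rows:
        group = tuple(row[k] for k in keys)
        totals[group][0] += row["passed"]
        totals[group][1] += row["total"]
    return {
        group: round(100 * passed / total, 1)
        for group, (passed, total) in totals.items()
        if total
    }


by_unit = rollup(results, ["business_unit"])      # summary per Business Unit
by_location = rollup(results, ["location"])       # summary per Location
enterprise = rollup(results, [])                  # single Enterprise-level score
print(by_unit, by_location, enterprise)
```

Counts are aggregated instead of percentages because averaging percentages across groups of different sizes would weight a small location the same as a large one.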

Sample scorecard.

Monitoring: Profiling the data to identify anomalies should not be a one-time activity. Data Quality should be monitored frequently to find the gaps, investigate the root causes, and fix them.
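A simple form of monitoring is to compare each new score with a minimum threshold and with the previous run. The following Python sketch assumes a hypothetical history of scores per metric and illustrative values for `threshold` and `max_drop`; real limits would come from the business.

```python
def find_regressions(history, threshold, max_drop):
    """Flag metrics whose latest score is below `threshold`, or fell by more
    than `max_drop` points compared with the previous run."""
    alerts = []
    for metric, scores in history.items():
        if not scores:
            continue
        latest = scores[-1]
        if latest < threshold:
            alerts.append((metric, "below threshold"))
        elif len(scores) > 1 and scores[-2] - latest > max_drop:
            alerts.append((metric, "sharp drop"))
    return alerts


# Hypothetical scores from successive profiling runs, oldest first.
history = {
    "email_completeness": [98.0, 97.5, 91.0],
    "postal_code_conformity": [88.0, 84.0],
    "address_consistency": [96.0, 96.2],
}
alerts = find_regressions(history, threshold=85.0, max_drop=5.0)
for metric, reason in alerts:
    print(f"{metric}: {reason}")
```

Each alert is a starting point for root-cause investigation, not a fix in itself.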

Correction/Improvement: Once profiling has found the data issues, corrective measures should be implemented across the different sources to fix the anomalies. Corrective actions can be implemented at the source applications or at the integration points identified from the data profiling results. It is highly recommended to prevent data quality issues at the source.

Data Quality Management Guidelines

- Since organizations depend on data for virtually everything, we need to be proactive in managing its quality and continuously improving it or, better yet, preventing data quality issues at the source.

- It is recommended to assess Data Quality and associate poor Data Quality with business impact (Revenue, Campaign Response, Decision-making time, Householding customers, Customer Segmentation, Quality of Service, etc.). This can be done by talking to Data Stewards and SMEs, quantifying the impact, and documenting SMART (Specific, Measurable, Achievable, Realistic, Time-bound) goals.

- Profile the required data elements for the different dimensions of data quality.

- Develop and use a custom profiler (custom code) for profiling that involves complex data comparison/validation rules.

- Most data profiling tools have the facility to store historical profiles; however, a custom Data Mart can also be used.

- Some of the Data Quality business rules may be complex and may need to be run against very large volumes of data and/or compare data between heterogeneous platforms. In these cases, we can consider running the profile on a sample of the data (assuming the sample represents the population).

- We strongly recommend against profiling the OLTP and Operational Data Sources directly, as it may impact business operations.

- Automated profiling activity should typically run during off-hours (nights, weekends, etc.).

- A BI tool of choice can be used to develop and present dashboards with a snapshot of the latest scores and trends.

- Collect and store the Data Quality scores at the granular level with different dimensions (Region, LOB, Division, Domain / Subject Area, etc.), which allows the scorecards to be presented at any level.

- Providing the Data Quality scores at different levels is not enough. The consumers of these reports should understand what the scores mean to their LOB or Business Process.

- Provide Data Quality Scorecard access to the different LOB Heads and encourage healthy competition among them to improve Data Quality.

- We highly recommend providing trend reports over a period of time so the Data Owners understand how the quality of their data is improving or declining.

- Prevention is better than cure. Prevent Data Quality issues by implementing proper data validation checks in your applications and data integrity checks on your data sources (Format Validation, Data Lookup, Referential Integrity, Default, Not Null, Check Constraints, Unique Constraints, etc.).

- The second place where data can be corrected is at the integration points or before loading the data into the data warehouse.
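The integrity checks named in the guidelines above (Not Null, Default, Check, Unique, and Referential Integrity) can be illustrated with Python's built-in `sqlite3` module. This is a sketch under stated assumptions: the `customer` and `country` tables, their columns, and the `try_insert` helper are hypothetical, and the e-mail `CHECK` only tests for an `@` with text on both sides, which is far from full validation.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is enabled per connection.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE country (code TEXT PRIMARY KEY);
CREATE TABLE customer (
    id           INTEGER PRIMARY KEY,
    name         TEXT NOT NULL,                                   -- Not Null
    email        TEXT NOT NULL UNIQUE CHECK (email LIKE '%_@_%'),  -- Unique + Check
    status       TEXT NOT NULL DEFAULT 'active',                  -- Default
    country_code TEXT NOT NULL REFERENCES country(code)           -- Referential Integrity
);
INSERT INTO country (code) VALUES ('CA');
""")


def try_insert(name, email, country_code):
    """Attempt an insert; return None on success, or the constraint error text."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO customer (name, email, country_code) VALUES (?, ?, ?)",
                (name, email, country_code),
            )
        return None
    except sqlite3.IntegrityError as exc:
        return str(exc)


ok = try_insert("Ada", "ada@example.com", "CA")            # accepted
bad_email = try_insert("Bob", "not-an-email", "CA")        # violates the Check constraint
duplicate = try_insert("Eve", "ada@example.com", "CA")     # violates the Unique constraint
bad_country = try_insert("Dan", "dan@example.com", "XX")   # violates Referential Integrity
missing_name = try_insert(None, "kim@example.com", "CA")   # violates Not Null

for label, error in [("ok", ok), ("bad_email", bad_email), ("duplicate", duplicate),
                     ("bad_country", bad_country), ("missing_name", missing_name)]:
    print(label, "->", error or "inserted")
```

Only the first row is stored; the other four are rejected by the database before they can become data quality issues downstream.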

Summary

Organizations need to maintain good quality data related to Customers, Products, Locations, Suppliers, Accounting, Inventory, etc. Data profiling aids in discovering and fixing data quality issues. There should be continuous monitoring of the data quality issues through data quality metrics. These metrics are useful in reporting the extent of data quality issues in various dimensions and help in prioritizing corrective actions. Data Quality Management is a cyclic process to profile, report, monitor and correct the data quality issues.

If you have questions about Data Quality or need help with Data Quality improvement, please engage with us via comments on this blog post or reach out to us here.