Microsoft Azure provides a quick and easy platform for deploying API Apps without the end user having to worry about the infrastructure or the deployment process. Azure DevOps makes this even simpler.

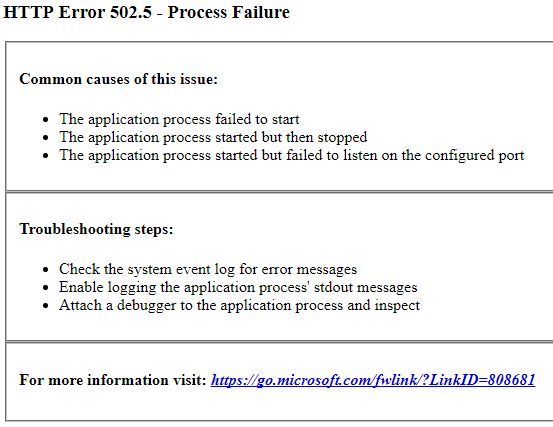

In a customer environment hosted in Azure, after a recent build and deployment of an API App using Azure DevOps, we saw the following error when trying to access the app via the Azure-provided API endpoint.

Steps Taken to Troubleshoot the Error

As soon as the client reached out to XTIVIA, we took the following steps to troubleshoot the issue.

Increase Log Levels

As a general practice, with any application troubleshooting, the first step is to enable logs for the application and increase the log levels to debug for both the Application logs and the WebServer logs.

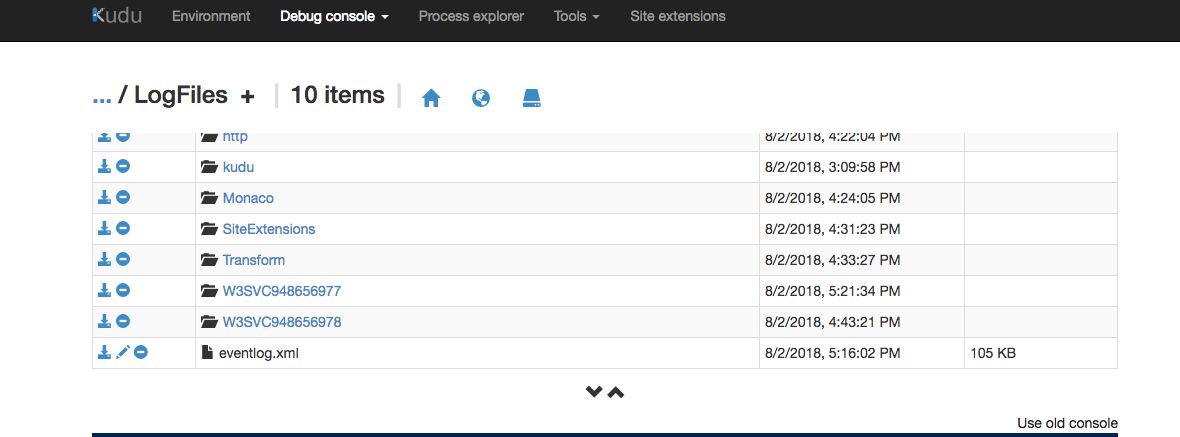
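
The same logging settings can also be applied from the Azure CLI instead of the portal. The snippet below is a minimal sketch, not the exact steps we ran: the function name and the app and resource group names in the example call are placeholders. Note that App Service calls its most detailed application log level "verbose" rather than "debug".

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn on detailed application and web server logging
# for an App Service app. Arguments: <app-name> <resource-group>.
enable_verbose_logs() {
  local app_name="$1" resource_group="$2"
  az webapp log config \
    --name "$app_name" \
    --resource-group "$resource_group" \
    --application-logging filesystem \
    --level verbose \
    --web-server-logging filesystem \
    --detailed-error-messages true \
    --failed-request-tracing true
}

# Example call (placeholder names, requires a prior `az login`):
# enable_verbose_logs my-api-app my-resource-group
# Stream the logs afterwards:
# az webapp log tail --name my-api-app --resource-group my-resource-group
```

Remember to lower the log level again once troubleshooting is complete, since detailed file system logging is meant for short diagnostic sessions.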

Enable Kudu and View the Logs

In the Azure API App settings, enable Kudu to browse the application and view the logs. Once enabled, Kudu provides an intuitive UI for browsing the application's directory structure and logs.

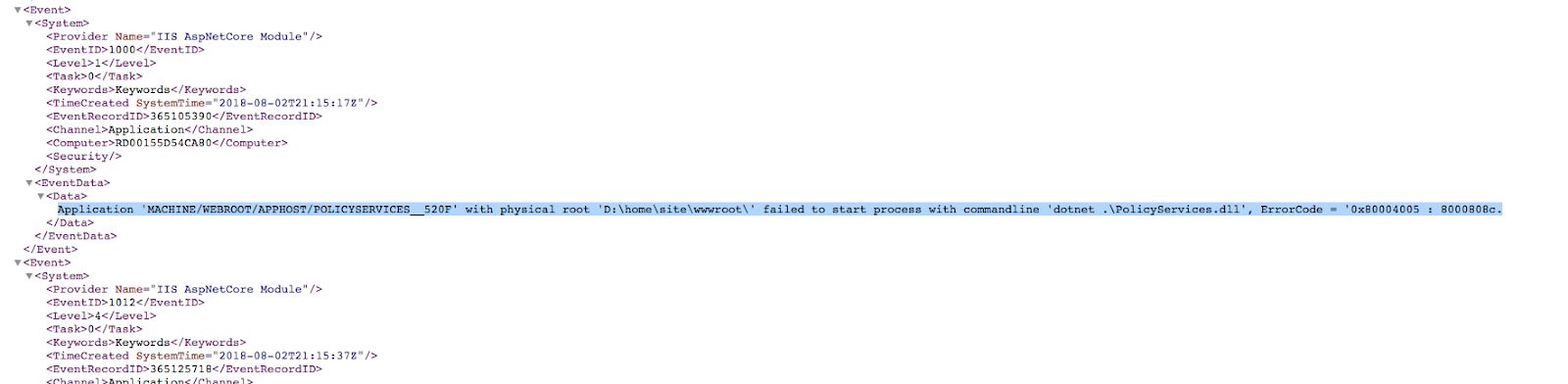

In the application’s event log, one line gave more insight into the cause of the error.

The error text “Failed to start process with commandline 'dotnet .\PolicyServices.dll'” pointed to an issue with the dotnet code.

Azure CLI to the Rescue

As a next step, we used the built-in Azure CLI in the API App dashboard to navigate to the root of the application and tried to start the API App using the dotnet .\PolicyServices.dll command.

Running the command threw an error saying that netcoreapp2.1 was not compatible with the version of .NET Core provided by the Azure API App service. This pointed to an issue with the package configuration itself.

Code Review

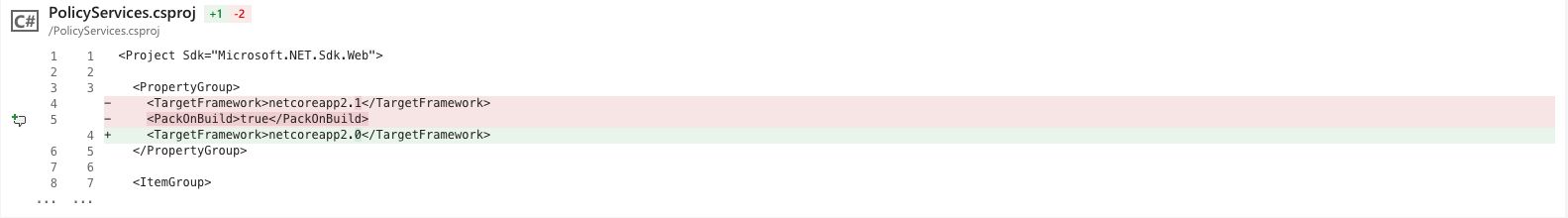

During a code review session, we found that a member of the development team had updated the Target Framework section of the API App csproj file to netcoreapp2.1 instead of the default netcoreapp2.0. He had done this to make the app deploy successfully on his local machine, which had the newer netcoreapp2.1 installed.

Reverting this change triggered the automated Continuous Integration pipeline, and the resulting deployment fixed the issue.
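
A small guard in the build pipeline could catch this kind of mismatch before deployment. The script below is a sketch under assumptions, not something that was part of the customer's pipeline: the function names are hypothetical, the csproj file name in the example call is only inferred from the DLL name, and it assumes the target framework is declared on a single line in a TargetFramework element.

```shell
#!/usr/bin/env bash
# Hypothetical CI guard: fail when a project targets a framework other than
# the one the hosting environment is expected to support.

# Print the value of the first <TargetFramework> element in a csproj file.
get_target_framework() {
  sed -n 's:.*<TargetFramework>\(.*\)</TargetFramework>.*:\1:p' "$1" | head -n 1
}

# Return 0 when the csproj targets the expected framework, 1 otherwise.
check_target_framework() {
  local csproj="$1" expected="$2" actual
  actual="$(get_target_framework "$csproj")"
  if [ "$actual" != "$expected" ]; then
    echo "ERROR: $csproj targets '$actual', expected '$expected'" >&2
    return 1
  fi
  echo "OK: $csproj targets $expected"
}

# Example call (file name assumed, not taken from the project):
# check_target_framework PolicyServices.csproj netcoreapp2.0
```

Run as an early build step, a non-zero exit code from the check would stop the pipeline before an incompatible package reaches the API App.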

Lessons Learned

While the issue was minor, here are a few practices we always ask our clients to follow to reduce downtime.

Establish Code Reviews

We understand that code reviews can be cumbersome. However, to keep Production running without interruption, code reviews need to be established for all environments, especially where Continuous Integration and Continuous Delivery pipelines deploy changes automatically.

Use Staging Slots

Azure provides a feature for staging an application deployment before it is made live. You can create multiple staging slots for an App, which allows the team to test a release before the staging slot is swapped with the live Production slot. If issues are found in the live environment even after testing, the slots can be swapped back almost immediately, reducing downtime.
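
Slots can be created and swapped from the Azure CLI as well as from the portal. The following is a minimal sketch: the function names and the app, resource group, and slot names in the example calls are placeholders chosen for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helpers around App Service deployment slots.
# Arguments for both: <app-name> <resource-group> <slot-name>.

# Create a new deployment slot for the app.
create_staging_slot() {
  az webapp deployment slot create \
    --name "$1" \
    --resource-group "$2" \
    --slot "$3"
}

# Swap the given slot with the live Production slot.
swap_slot_into_production() {
  az webapp deployment slot swap \
    --name "$1" \
    --resource-group "$2" \
    --slot "$3" \
    --target-slot production
}

# Example calls (placeholder names, requires a prior `az login`):
# create_staging_slot my-api-app my-resource-group staging
# swap_slot_into_production my-api-app my-resource-group staging
```

Because a swap exchanges the two slots, running the same swap a second time puts the previous release back into Production, which is what makes the rollback so quick.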

Summary

With Microsoft Azure’s App Service deployments, organizations don’t have to worry about the underlying infrastructure. Azure also provides mechanisms for mitigating service loss in Production and Production-like environments. We strongly recommend using them to improve the customer experience.

If you have questions on how you can best leverage our skill set and/or need help with your Liferay DXP implementation, please engage with us via comments on this blog post, or reach out to us.

Additional Reading

You can also continue to explore various topics such as “Serverless and PaaS, FaaS, SaaS: Same, Similar or Not Even Close?”, “Top 5 DevOps Features in Liferay DXP (DevOps perspective)”, and “Liferay DXP Apache HTTPD Health Checks”.